When approaching the field of human-machine interaction (HMI), especially with personal assistants, it is important to take into account a more “relational” dimension. Speech recognition technologies, among many other innovations, keep improving their features to encourage public adoption. The goal is that talking to a voice assistant should feel as natural as talking to a human. From this observation, we present what we, and many experts, consider one of the essential elements of these new technologies: Speech-To-Emotion.

Emotion recognition, the next step in the human-machine relationship.

Today, voice assistants, like other technologies in the field, are gaining ground among many different audiences. Because their features are, by nature, easier and more intuitive to use, these new tools are moving from a “Nice-To-Have” to a true “Must-Have” in many areas. However, according to Sophie Kleber, Executive Director at Huge, this is far from enough to unleash the full potential of human-machine conversation.

In a talk entitled “Designing emotionally intelligent machines”, she presents her vision of the rise of voice as an interaction mode, and explains that it will go hand in hand with the emergence of Affective Computing. In other words, to build strong relationships with humans, systems will have to be able to recognize, interpret, use and simulate emotions.

This vision is shared by many specialists in the field, including Viktor Rogzik, a researcher in Amazon’s Alexa Speech Group: “Emotional recognition is an increasingly popular research topic in the field of artificial intelligence dedicated to conversation.” Developing speech technology will inevitably involve the emotional dimension. The first work in this area has already been done, but the state of the art still has a long way to go. Here is why.

Emotion, why is it so hard to exploit?

As with many cognitive technologies (those modeled on how the human brain works), some complex processes are very hard to reproduce effectively. Language and interpretation are two fields with countless exceptions and peculiarities. Irony, or stylistic devices such as euphemism, alter the actual meaning of a sentence and require highly contextual interpretation. Beyond the words themselves, micro-expressions, voice modulation and similar cues must all be taken into account to fully grasp users' intentions, conscious or not. Relying solely on words is therefore a serious mistake, and it is precisely the one made in the earliest work on the subject, where each word was simply assigned a positive or negative coefficient according to its meaning.
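To make that limitation concrete, here is a minimal sketch of the word-coefficient approach, written for illustration only. The lexicon and its weights are hypothetical assumptions, not taken from any real sentiment resource. It shows how an ironic complaint, which a human would immediately hear as frustration, comes out as “positive” once the coefficients are summed.

```python
import re

# Hypothetical toy lexicon: each word gets a positive or negative coefficient.
# Words and weights are illustrative assumptions, not from a real resource.
LEXICON = {
    "great": 1.0,
    "love": 1.0,
    "perfect": 1.0,
    "bad": -1.0,
    "late": -0.5,
    "hate": -1.0,
}


def lexicon_score(sentence: str) -> float:
    """Sum the coefficients of known words; unknown words count as 0."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return sum(LEXICON.get(word, 0.0) for word in words)


def polarity(score: float) -> str:
    """Map a summed score to a coarse label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# An ironic complaint: the word-level sum misses the sarcasm entirely.
ironic = "Great, the train is late again. Perfect!"
score = lexicon_score(ironic)
print(score, polarity(score))  # 1.5 positive
```

Nothing in this scoring can see tone of voice, context or intent, which is exactly why modern approaches also look at acoustic and conversational signals.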

Other obstacles include:

- Emotions are subjective, and their interpretation can vary widely from one person to another. Even defining an emotion precisely is very difficult.

- What should be taken into account for the recognition of an emotion? The meaning of a single word, a set of words or an entire conversation?

- Collecting data is very complicated. Audio is available in huge amounts, yet reliable data about emotions is hard to find. For example, TV news is delivered in a neutral tone and offers little usable emotional data, while actors only mimic emotions, which biases identification.

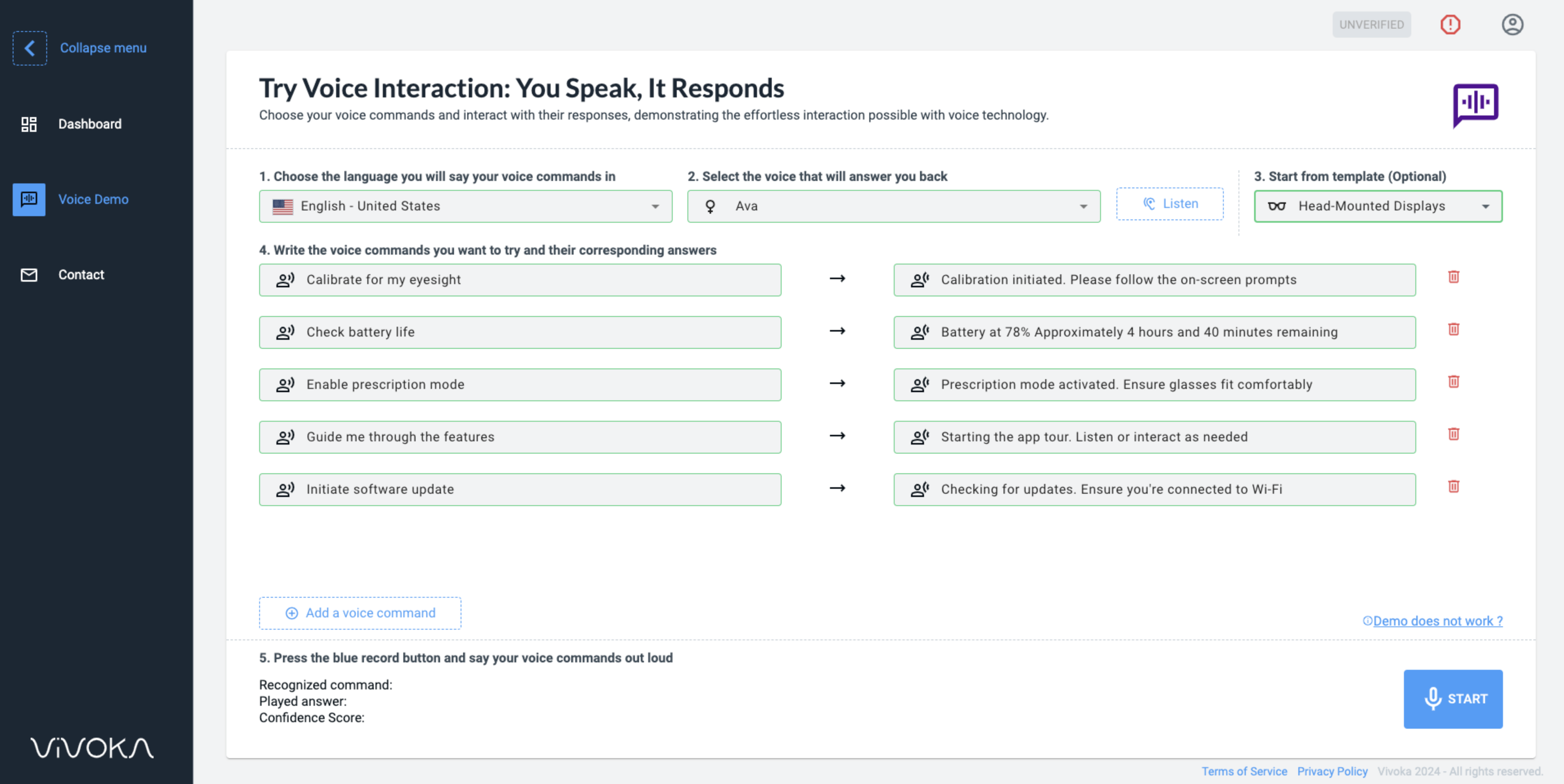

How can you take advantage of Speech-To-Emotion?

This is surely the part that interests you most, and the most practical one! First of all, this feature is expected to become essential for the future of voice assistants. “We believe that in the future, all our users will want to interact with assistants in an emotional way. This is the trend we see in the long term”, Felix Zhang, Vice President of Software Engineering at Huawei, told CNBC.

In the near future, it will be possible to couple Speech-To-Emotion (STE) engines with Natural Language Understanding (NLU) and Natural Language Processing (NLP) systems to identify and interpret the emotions in a conversation or speech. This opens up a very wide range of applications! For example, services could be further personalized according to the emotions the user feels. What better way to improve the experience than to adapt the result to how the user feels?
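As an illustration only, the coupling described above could look like the following sketch. Both the STE and NLU outputs are modeled as hypothetical data classes (`EmotionResult`, `NluResult`), and the emotion-to-style policy and confidence threshold are assumptions chosen for the example, not features of any particular product. The idea is simply that NLU tells the assistant *what* the user wants, STE suggests *how* they feel, and the response combines both.

```python
from dataclasses import dataclass


@dataclass
class EmotionResult:
    """Hypothetical output of a Speech-To-Emotion engine."""
    label: str         # e.g. "neutral", "frustrated", "happy"
    confidence: float  # between 0.0 and 1.0


@dataclass
class NluResult:
    """Hypothetical output of an NLU engine."""
    intent: str
    entities: dict


# Hypothetical policy: how the assistant adapts its reply to each emotion.
RESPONSE_STYLE = {
    "frustrated": "apologetic",
    "happy": "enthusiastic",
    "neutral": "standard",
}


def choose_style(emotion: EmotionResult, threshold: float = 0.6) -> str:
    """Adapt only when the emotion engine is confident; otherwise stay standard."""
    if emotion.confidence < threshold:
        return "standard"
    return RESPONSE_STYLE.get(emotion.label, "standard")


def build_response(nlu: NluResult, emotion: EmotionResult) -> dict:
    """Combine what the user asked (NLU) with how they seem to feel (STE)."""
    return {"intent": nlu.intent, "entities": nlu.entities, "style": choose_style(emotion)}


request = NluResult(intent="check_train_status", entities={"line": "RER B"})
print(build_response(request, EmotionResult("frustrated", 0.82)))
```

Falling back to a standard style below a confidence threshold is a deliberately cautious choice: given how subjective emotions are, reacting to a misread emotion can hurt the experience more than not reacting at all.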

We talk a lot about what voice can offer in terms of experience. To exploit this dimension, the user’s voice is not the only lever. Are you familiar with TTS (Text-To-Speech)? These speech synthesis engines create near-human voices from text. The voices of the SNCF or RATP come from such engines! Building on what we said above, a TTS voice adapted to the emotion identified is another way to strengthen the human-machine relationship.
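Many TTS engines accept SSML (Speech Synthesis Markup Language), a W3C standard whose `<prosody>` element controls rate, pitch and volume. The sketch below, a hypothetical illustration rather than any engine's official approach, wraps a reply in SSML whose prosody depends on the emotion detected in the user's voice. The emotion-to-prosody mapping is an assumption chosen for the example; the keyword values (`slow`, `low`, `soft`, and so on) are standard SSML 1.1 values, but how each engine renders them varies. It emits a bare `<speak>` root, which many cloud TTS engines accept; a strictly conformant SSML 1.1 document would also declare `version` and `xmlns`.

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from detected emotion to SSML <prosody> settings.
# Values are standard SSML 1.1 keywords; rendering differs between TTS engines.
PROSODY_BY_EMOTION = {
    "frustrated": {"rate": "slow", "pitch": "low", "volume": "soft"},
    "happy": {"rate": "medium", "pitch": "high", "volume": "medium"},
}


def to_ssml(text: str, emotion: str) -> str:
    """Wrap a reply in SSML, adjusting prosody to the user's detected emotion."""
    safe_text = escape(text)  # escape &, < and > so the text cannot break the markup
    settings = PROSODY_BY_EMOTION.get(emotion)
    if settings is None:
        # Unknown or neutral emotion: keep the engine's default voice.
        return f"<speak>{safe_text}</speak>"
    attrs = " ".join(f'{name}="{value}"' for name, value in settings.items())
    return f"<speak><prosody {attrs}>{safe_text}</prosody></speak>"


print(to_ssml("Sorry for the delay. Your train leaves in 5 minutes.", "frustrated"))
```

A calmer, slower delivery for a frustrated user is only one possible policy; in practice it would need to be validated with real users.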

The future of voice systems therefore lies in emotion recognition. This kind of emotional intelligence is on its way to bringing humans even closer to machines. However, it still involves processing personal data. Voice profiling must therefore serve only to improve the experience and must fully respect the GDPR (RGPD) and other privacy-protection principles.