Beyond Naïve RAG: Advanced Retrieval for Conversational AI on Long Documents

With the rise of powerful Large Language Models (LLMs) and the rapid adoption of Generative AI, intelligent question-answering over long, complex enterprise documents is emerging as a transformative capability. From voice assistants guiding field technicians as they troubleshoot machinery in real time, to AI-driven customer support systems and financial assistants that interpret dense reports, these applications are unlocking new levels of productivity across industries.

However, deploying LLMs to answer conversational questions from vast proprietary data sources is not a trivial task. While the growing capabilities of long-context models make it tempting to load them with all available internal documentation, real-world enterprise environments introduce challenges ranging from data relevance and security to latency and cost efficiency.

While long-context LLMs such as GPT-4o (128K tokens), Gemini 2.0 Pro (2M tokens), and Llama 4 Scout (10M tokens) can process large inputs, they come with trade-offs: higher inference cost, hallucinations, lack of traceability, diminishing accuracy when dealing with scattered information, and scalability limitations, among others. They also suffer from the “needle-in-the-haystack” problem, where a model struggles to retrieve a critical piece of information (the “needle”) buried within extensive, less relevant text (the “haystack”). Simply relying on a large context window and feeding LLMs all available data is therefore not a silver bullet; it is a brute-force approach to a problem that demands smarter solutions.

To tackle this challenge, enterprises require AI systems that retrieve and integrate relevant proprietary knowledge into LLM responses, without sacrificing cost, scale, or accuracy. Retrieval-Augmented Generation (RAG) meets this need by pulling context-specific data—like relevant snippets from manuals or logs—at query time to deliver accurate, domain-aligned responses.

Naïve RAG methods like keyword or vector searches often fail in real-world use. Users rarely write perfect queries—conversations shift, details are missing, and relevant information is often buried deep within lengthy documents. This leads to frustrating user experiences and inefficiencies in mission-critical tasks.

To build truly effective AI assistants, we need advanced retrieval strategies that go beyond basic lexical or semantic matching.

Why Long-Context Models Alone Fall Short

Even with LLMs like GPT-4o, Claude 3.5 Sonnet, DeepSeek-R1, Gemini 2.0 Pro, Llama 4, and others boasting massive context windows—ranging from 128K to 10 million tokens—stuffing entire documents into their context often proves inefficient for enterprise settings.

Hallucinations & Traceability

LLMs often hallucinate—generating plausible-sounding but incorrect or unverifiable information—while lacking traceability. RAG addresses this by grounding outputs in retrieved, verifiable sources. Linking each answer to specific documents enables auditing and makes RAG-based systems more reliable and enterprise-ready.

The “Needle-in-the-Haystack” Problem

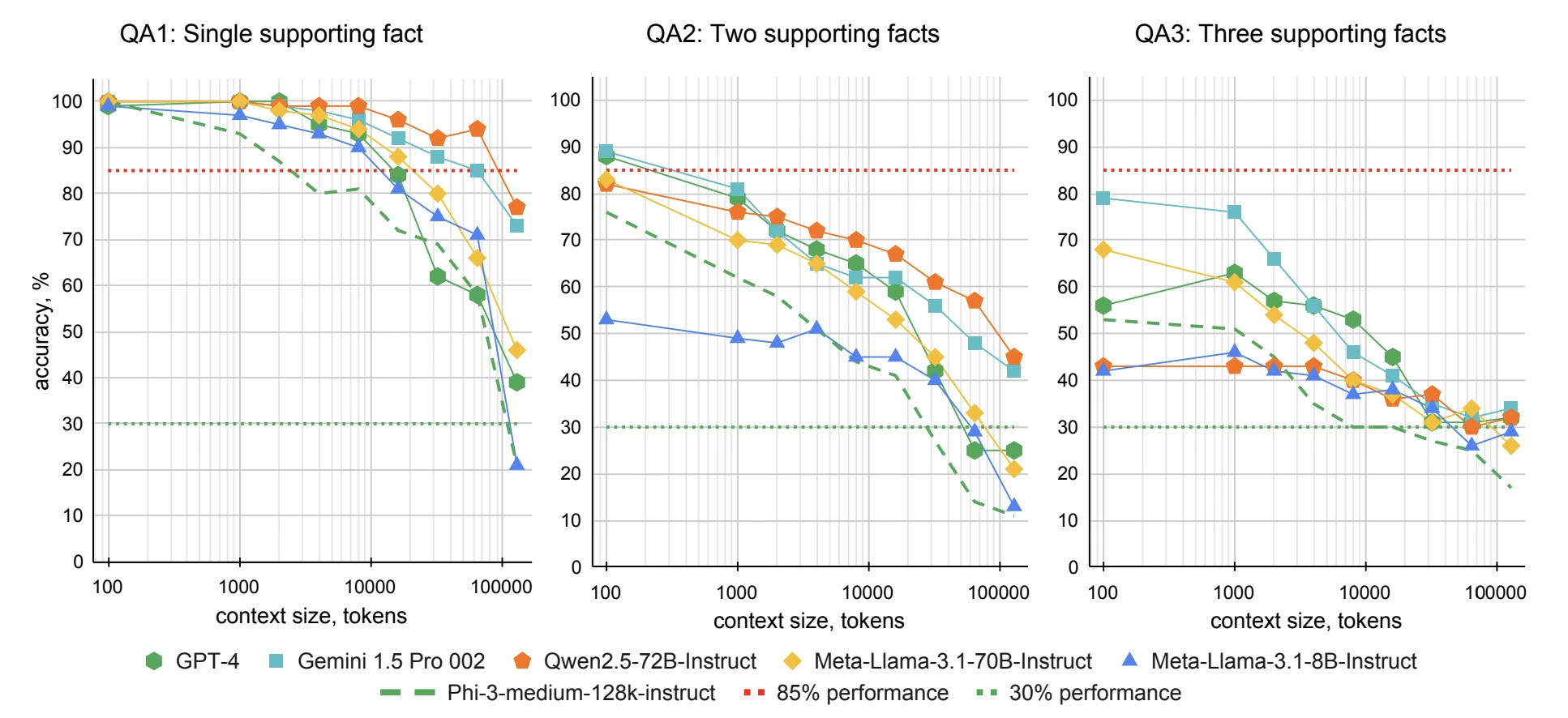

LLMs struggle to prioritize relevant information in long inputs. Studies such as BABILong and NoLiMa show that model performance drops when supporting facts are scattered across the context or phrased differently from the question. BABILong finds that LLMs effectively use only 10–20% of the context when reasoning over distributed facts. Feeding in more text therefore doesn’t guarantee better results; targeted retrieval is essential.

Figure taken from BABILong

Higher Inference Cost and Scalability

Long-context models process every token, making full-document inputs costly and inefficient. For enterprises managing massive volumes of data, this approach doesn’t scale. Efficient retrieval strategies are therefore necessary to extract only the most relevant information from distributed knowledge bases, reducing input size, cutting costs, and improving scalability.

Modularity & Debugging

Long-context LLMs, while powerful, often behave like black boxes, offering little room to diagnose issues when things go wrong. Without modular components to isolate and inspect, troubleshooting becomes challenging. RAG adds flexibility and control, helping trace problems and improve overall system reliability.

Regulatory & Privacy Constraints

LLMs aren’t permission-aware: feeding them unrestricted documents risks exposing sensitive data. RAG addresses this by integrating with fine-grained authorization (AuthZ) systems.

When a query is issued, the system can map the user’s attributes to the metadata and access policies tied to each data element, such as individual sections or fields within documents. This enables the system to retrieve only those elements relevant to the query that the user is authorized to see. The result: stronger data governance, protection of sensitive content, and highly relevant responses without compromising security.
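As a rough illustration of the filtering step described above, the sketch below attaches hypothetical access-policy metadata (`allowed_departments`, `min_clearance`) to each chunk and checks it against the user’s attributes before any ranking happens. The `Chunk` and `User` classes and the keyword-overlap scorer are illustrative assumptions, not the API of any particular authorization product; a production system would delegate `is_authorized` to its policy engine.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Chunk:
    text: str
    section: str
    # Hypothetical access-policy metadata attached at indexing time.
    allowed_departments: set[str] = field(default_factory=set)  # empty = unrestricted
    min_clearance: int = 0


@dataclass
class User:
    name: str
    department: str
    clearance: int


def is_authorized(user: User, chunk: Chunk) -> bool:
    """Attribute-based check: department must be allowed and clearance high enough."""
    dept_ok = not chunk.allowed_departments or user.department in chunk.allowed_departments
    return dept_ok and user.clearance >= chunk.min_clearance


def keyword_overlap(query: str, text: str) -> int:
    """Stand-in relevance score: number of shared lowercase words."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def permission_aware_search(
    user: User,
    query: str,
    chunks: list[Chunk],
    score_fn: Callable[[str, str], float] = keyword_overlap,
    k: int = 3,
) -> list[Chunk]:
    # Filter BEFORE ranking so unauthorized text can never reach the LLM prompt.
    visible = [c for c in chunks if is_authorized(user, c)]
    return sorted(visible, key=lambda c: score_fn(query, c.text), reverse=True)[:k]


chunks = [
    Chunk("pump maintenance schedule inspect seals monthly", "4.2"),
    Chunk("pump supplier contract pricing and discounts", "9.1", {"finance"}, min_clearance=2),
]
technician = User("ana", "field-service", clearance=1)
analyst = User("ben", "finance", clearance=2)
print([c.section for c in permission_aware_search(technician, "pump pricing", chunks)])  # ['4.2']
print([c.section for c in permission_aware_search(analyst, "pump pricing", chunks)])     # ['9.1', '4.2']
```

The technician never sees the contract chunk, even though it matches the query better; the analyst sees both, ranked by relevance.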

Pitfalls of Naïve RAG over Long Documents

Naïve RAG splits documents into chunks, converts them into vector embeddings, and stores them in a vector database. When a query is made, it’s transformed into a vector and matched to stored chunks using similarity metrics like cosine similarity.
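The pipeline just described fits in a few lines. In this minimal sketch, a bag-of-words term-frequency vector stands in for a neural embedding model and a Python list stands in for the vector database; the names `chunk_text`, `embed`, and `NaiveVectorStore` are illustrative, not from any library. Swapping in a real encoder and vector database would leave the retrieval logic unchanged.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into fixed-size word windows that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words term frequencies (stand-in for a neural encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class NaiveVectorStore:
    def __init__(self) -> None:
        self.chunks: list[str] = []
        self.vectors: list[Counter] = []

    def add_document(self, text: str, chunk_size: int = 40, overlap: int = 10) -> None:
        for chunk in chunk_text(text, chunk_size, overlap):
            self.chunks.append(chunk)
            self.vectors.append(embed(chunk))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        # Every chunk is scored independently against the query vector.
        q = embed(query)
        scored = [(cosine(q, v), c) for v, c in zip(self.vectors, self.chunks)]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


store = NaiveVectorStore()
store.add_document("Clean the condenser coils every three months to keep airflow steady.", chunk_size=8, overlap=2)
store.add_document("If the compressor overheats, check the refrigerant pressure first.", chunk_size=8, overlap=2)
print(store.search("compressor overheating", k=1))
```

Because each chunk is scored in isolation, nothing in this design captures how chunks relate to one another.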

While this works for simple queries, it struggles in enterprise use cases where:

- Answers require synthesizing insights scattered across large documents

- Layered interdependent reasoning steps are required

- Queries are ambiguous, incomplete, or part of a longer conversation

Let’s explore these challenges and potential solutions.

Cross-Chunk Dependencies

Enterprise documents—technical manuals, compliance guides, knowledge bases—often spread related information across sections. Naïve RAG ignores these links, retrieving isolated chunks that lack broader context. This results in fragmented answers, requiring users to piece together information themselves.

Potential Solutions

- Hierarchical Retrieval: Stores embeddings at multiple levels, enabling broad-to-specific retrieval (e.g., from section summary to detailed paragraph), which ensures coherent and contextually rich responses. HiRAG is one such hierarchical approach.

- Structure-Aware Retrieval: Leverages document structure (e.g., paragraphs, subsections) and metadata (e.g., timestamps, authorship) to improve retrieval precision. A recent study introduced the Chunk-Interaction Graph, which maps structural, semantic, and keyword-based relationships across documents.

- Graph-Based Retrieval: Uses knowledge graphs to model relationships between entities and concepts, enabling context-aware retrieval. Techniques like GraphRAG generate structured summaries over related entities, while LightRAG efficiently captures both low-level entity details and high-level conceptual relationships.
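To make the broad-to-specific idea concrete, here is a minimal two-level sketch: the query is first matched against section summaries, and only paragraphs from the best-matching sections are ranked. It is a generic illustration of hierarchical retrieval, not the HiRAG algorithm; the `Section` structure and its precomputed `summary` field (for example, written by an LLM at indexing time) are assumptions, and bag-of-words similarity stands in for real embeddings.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words term frequencies."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class Section:
    title: str
    summary: str            # assumed to be precomputed at indexing time
    paragraphs: list[str]


def hierarchical_search(query: str, sections: list[Section],
                        top_sections: int = 1, top_paragraphs: int = 2) -> list[tuple[str, str]]:
    q = embed(query)
    # Level 1: coarse match against section summaries.
    best = sorted(sections, key=lambda s: cosine(q, embed(s.summary)), reverse=True)[:top_sections]
    # Level 2: fine-grained match, restricted to paragraphs of the selected sections.
    candidates = [(s.title, p) for s in best for p in s.paragraphs]
    candidates.sort(key=lambda tp: cosine(q, embed(tp[1])), reverse=True)
    # Returning the section title keeps each paragraph tied to its broader context.
    return candidates[:top_paragraphs]


manual = [
    Section("Cooling system", "cooling airflow fans evaporator condenser temperature",
            ["Clean the evaporator fan blades monthly.", "Check condenser airflow for blockages."]),
    Section("Electrical", "wiring fuses power supply breakers",
            ["Replace blown fuses with the same rating.", "Inspect breaker panels yearly."]),
]
for title, paragraph in hierarchical_search("evaporator fan airflow problem", manual):
    print(f"[{title}] {paragraph}")
```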

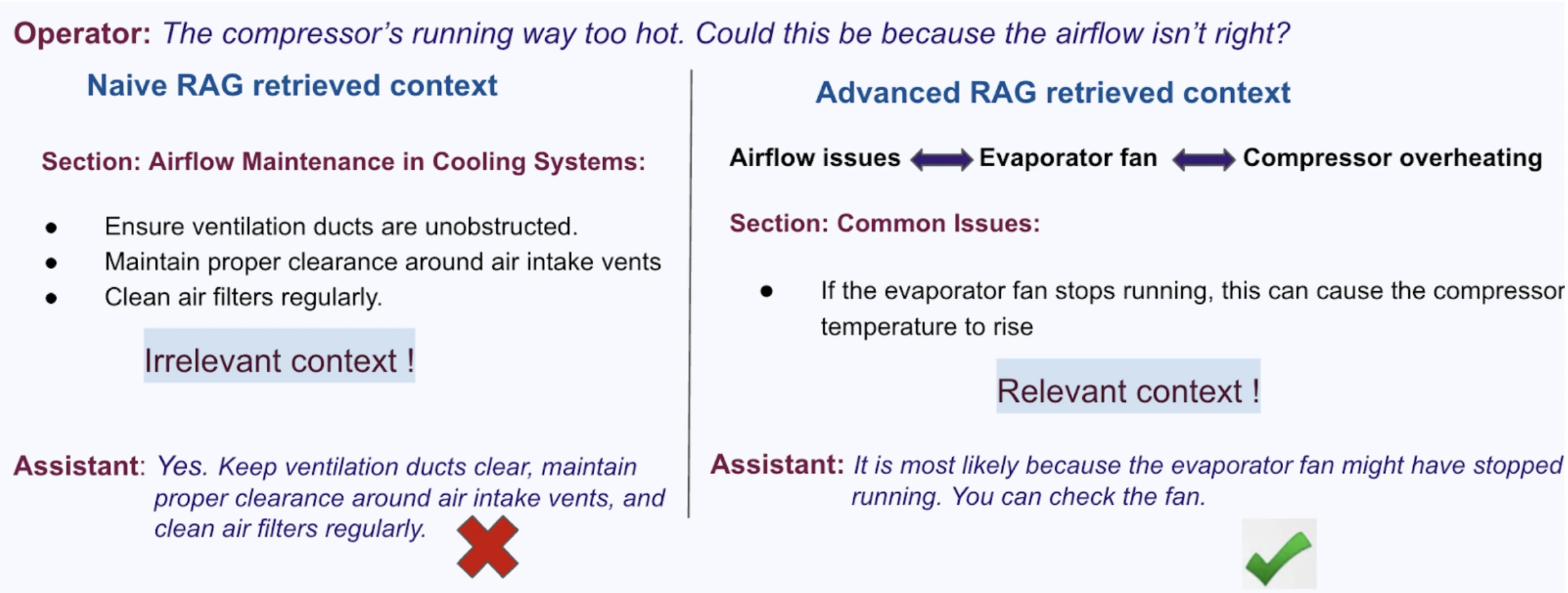

The example above illustrates the advantage of accounting for cross-chunk relationships. Naïve RAG cannot identify the relationship between airflow issues and compressor overheating, which is needed to answer the question correctly; lacking any notion of how chunks relate, it simply retrieves the chunks containing information on airflow maintenance. Advanced RAG, on the other hand, maintains cross-chunk relationships and can therefore identify the bridge between airflow issues and compressor overheating: the evaporator fan. This leads to retrieval of the correct context and an accurate answer.
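A minimal sketch of how such a bridge can be found: chunks are linked whenever they mention a common entity, and retrieval expands from the query’s seed chunks along those links. The entity list, the substring-based `extract_entities`, and the chunk texts are hypothetical stand-ins; a real system would typically extract entities with an NER model or an LLM and build a richer graph.

```python
from collections import defaultdict

# Hypothetical entity vocabulary; a real system would extract entities with NER or an LLM.
ENTITIES = ["airflow", "evaporator fan", "compressor", "air filter"]


def extract_entities(text: str) -> set[str]:
    lowered = text.lower()
    return {e for e in ENTITIES if e in lowered}


def build_chunk_graph(chunks: dict[str, str]) -> dict[str, set[str]]:
    """Link two chunks whenever they mention a common entity."""
    by_entity: dict[str, set[str]] = defaultdict(set)
    for cid, text in chunks.items():
        for ent in extract_entities(text):
            by_entity[ent].add(cid)
    graph: dict[str, set[str]] = defaultdict(set)
    for ids in by_entity.values():
        for cid in ids:
            graph[cid] |= ids - {cid}
    return graph


def graph_retrieve(query: str, chunks: dict[str, str], graph: dict[str, set[str]], hops: int = 1) -> list[str]:
    q_ents = extract_entities(query)
    # Seeds: chunks sharing an entity with the query (roughly what naive matching finds).
    found = {cid for cid, text in chunks.items() if extract_entities(text) & q_ents}
    frontier = set(found)
    for _ in range(hops):
        # Follow entity links one step further, skipping chunks already collected.
        frontier = {n for cid in frontier for n in graph.get(cid, set())} - found
        found |= frontier
    return sorted(found)


chunks = {
    "c1": "Low airflow is often traced to a worn evaporator fan motor.",
    "c2": "If the evaporator fan stops, heat builds up and the compressor overheats.",
    "c3": "Replace the air filter every three months to keep airflow steady.",
    "c4": "Tighten the door hinges annually.",
}
graph = build_chunk_graph(chunks)
query = "Why does poor airflow make the unit run hot?"
print(graph_retrieve(query, chunks, graph, hops=0))  # ['c1', 'c3']: airflow chunks only
print(graph_retrieve(query, chunks, graph, hops=1))  # ['c1', 'c2', 'c3']: c2 reached via "evaporator fan"
```

With no expansion, only the airflow chunks come back; one hop along the shared “evaporator fan” entity pulls in the chunk that explains compressor overheating.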

Alongside these techniques, enterprise use cases offer rich opportunities to leverage domain-specific relationships — such as equipment hierarchies in field maintenance, or procedural linkages in compliance. This can enable customized retrieval systems to model cross-chunk interrelations in a way uniquely tailored to the domain.

Multi-part and Multi-hop Queries

Complex enterprise queries often require multiple interdependent reasoning steps. For example:

“What’s the maximum operating temperature of the backup generator in the facility with the highest power capacity?”

This involves: (1) finding the right facility, then (2) retrieving generator specs.
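The two hops can be made explicit in code. In the sketch below, the corpus, facility names, and figures are invented for illustration, and `retrieve` is a substring filter standing in for a real retriever; the point is that the second sub-query can only be formed once the first hop has been answered.

```python
import re

# Hypothetical corpus: each string stands for one retrievable chunk.
CORPUS = [
    "Facility Alpha has a total power capacity of 40 MW.",
    "Facility Beta has a total power capacity of 65 MW.",
    "The backup generator at Facility Alpha has a maximum operating temperature of 50 °C.",
    "The backup generator at Facility Beta has a maximum operating temperature of 45 °C.",
]


def retrieve(required_phrases: list[str]) -> list[str]:
    """Stand-in retriever: return chunks that contain every required phrase."""
    return [c for c in CORPUS if all(p.lower() in c.lower() for p in required_phrases)]


def answer_multi_hop() -> tuple[str, float]:
    # Hop 1 sub-query: "Which facility has the highest power capacity?"
    capacities: dict[str, float] = {}
    for chunk in retrieve(["power capacity"]):
        m = re.search(r"Facility (\w+) .*?capacity of ([\d.]+) MW", chunk)
        if m:
            capacities[m.group(1)] = float(m.group(2))
    facility = max(capacities, key=capacities.get)

    # Hop 2 sub-query, built from the hop-1 answer.
    for chunk in retrieve([f"Facility {facility}", "backup generator"]):
        m = re.search(r"maximum operating temperature of ([\d.]+)", chunk)
        if m:
            return facility, float(m.group(1))
    raise LookupError(f"No generator spec found for Facility {facility}")


print(answer_multi_hop())  # ('Beta', 45.0)
```

A single-shot search for the generator’s maximum operating temperature would match both generator chunks equally well; only the decomposition ties the answer to the right facility.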

Potential Solutions

- ReSP (Retrieve, Summarize, Plan): Breaks questions into sub-queries, retrieves and summarizes supporting evidence, and decides whether more steps are needed.

- Generate-then-Ground: Alternates between generation and retrieval for factual accuracy.

- End-to-End Beam Retrieval: Explores multiple reasoning paths in parallel to avoid missing key information.
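To illustrate the beam idea, the sketch below keeps the `beam_width` highest-scoring chunk paths at each hop instead of committing to a single best chunk. The scorer is a hypothetical lexical stand-in: question-term coverage plus a bonus when consecutive chunks share a term the question never mentions (a bridge entity). Systems such as End-to-End Beam Retrieval learn the path scorer rather than hand-coding it, so treat this only as a sketch of the search procedure.

```python
STOPWORDS = {"the", "of", "at", "with", "a", "has"}


def words(text: str) -> set[str]:
    return set(text.lower().split()) - STOPWORDS


def path_score(question: str, texts: list[str]) -> int:
    q = words(question)
    # Coverage: how many question terms the path's chunks mention in total.
    covered = set().union(*(words(t) for t in texts)) & q
    # Bridge bonus: consecutive chunks sharing a term the question never mentions.
    bridges = sum(bool((words(x) & words(y)) - q) for x, y in zip(texts, texts[1:]))
    return len(covered) + bridges


def beam_retrieve(question: str, chunks: dict[str, str], beam_width: int = 2, hops: int = 2):
    beams: list[tuple[tuple[str, ...], int]] = [((), 0)]
    for _ in range(hops):
        candidates = []
        for path, _score in beams:
            for cid in chunks:
                if cid in path:
                    continue
                new_path = path + (cid,)
                candidates.append((new_path, path_score(question, [chunks[c] for c in new_path])))
        # Keep several partial paths alive instead of greedily committing to one.
        beams = sorted(candidates, key=lambda b: b[1], reverse=True)[:beam_width]
    return beams


chunks = {
    "a": "facility beta has the highest power capacity",
    "b": "backup generator at facility beta max temperature 45",
    "c": "facility alpha backup generator max temperature 50",
    "d": "cafeteria opening hours",
}
question = "max temperature of the backup generator at the facility with highest power capacity"
for path, score in beam_retrieve(question, chunks):
    print(path, score)
```

Both surviving paths cover every question term, so coverage alone cannot choose between the two facilities; the shared term “beta” is what ranks the correct path first.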

These approaches improve reasoning, but scalable, robust multi-hop retrieval remains an evolving challenge.

Multi-turn Retrieval or Conversational Search

Users often ask follow-up questions based on earlier conversation turns. Naïve RAG systems that rely only on the latest query miss important context. On the flip side, turning the entire dialogue into one vector can dilute query intent.

Example: A technician asks, “Has this issue been reported before?” If the system doesn’t link it to prior context, it may return irrelevant results.

Potential Solutions

Most studies address this problem using one or a combination of two broad categories of approaches:

- Conversational Dense Retrieval (CDR): CDR systems are trained end-to-end to fetch relevant data using both the current query and dialogue history. They often fine-tune language models like Bidirectional Encoder Representations from Transformers (BERT) to recognize relationships across dialogue turns. However, these systems can be opaque—it’s hard to understand why a certain result was returned, which can be a challenge in enterprise environments where explainability matters.

- Conversational Query Reformulation (CQR): Instead of training a complex retriever, it rewrites the user’s latest question into a self-contained query using earlier dialogue, typically through custom-tuned models or LLM prompting. It’s a flexible, integration-friendly approach but doesn’t offer the end-to-end retrieval optimization that CDR provides.
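A minimal CQR sketch, assuming access to some instruction-following LLM exposed as a plain `llm(prompt) -> str` callable (a hypothetical interface; wire it to whichever client you use). Only the last few turns are included, which is one simple way to keep older, unrelated dialogue from diluting intent; the rewritten query can then be passed to any standard retriever.

```python
from typing import Callable

REWRITE_TEMPLATE = """Rewrite the final user question so it can be understood without the conversation.
Resolve pronouns and references such as "this issue" using the history. Return only the rewritten question.

Conversation:
{history}

Final question: {question}
Rewritten question:"""


def build_rewrite_prompt(history: list[tuple[str, str]], question: str, max_turns: int = 4) -> str:
    # Keep only the most recent turns so older, unrelated topics do not dilute intent.
    recent = history[-max_turns:]
    transcript = "\n".join(f"{speaker}: {text}" for speaker, text in recent)
    return REWRITE_TEMPLATE.format(history=transcript, question=question)


def rewrite_query(history: list[tuple[str, str]], question: str,
                  llm: Callable[[str], str], max_turns: int = 4) -> str:
    """Return a self-contained version of `question` for the retriever."""
    if not history:
        return question  # first turn: nothing to resolve
    rewritten = llm(build_rewrite_prompt(history, question, max_turns)).strip()
    return rewritten or question  # fall back to the raw question on an empty reply


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call, for demonstration only.
    return "Has compressor overheating on chiller 3 been reported before?"


history = [
    ("user", "The compressor on chiller 3 keeps overheating."),
    ("assistant", "Check the evaporator fan and the refrigerant charge first."),
]
print(rewrite_query(history, "Has this issue been reported before?", fake_llm))
```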

To ensure accurate, context-aware responses, retrieval must balance conversational context with precision in extracting relevant information.

Ambiguous or Incomplete Questions

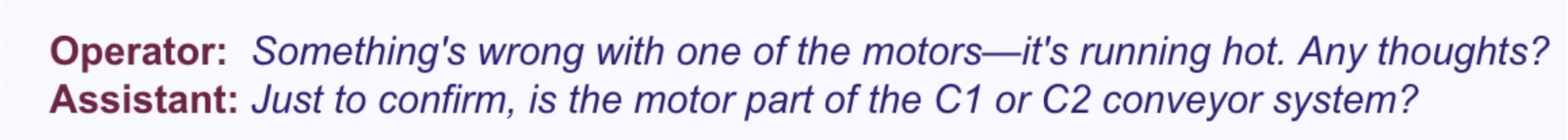

Real-world queries are often vague. Example: “Something’s wrong with one of the motors—it’s running hot. Any thoughts?” Without specifics, responses can be generic or risky.

Potential Solutions

To address this, advanced systems generate targeted clarifying or follow-up questions that help uncover missing details. Knowledge-driven methods combine LLMs with structured data like knowledge graphs that can enable an AI assistant to ask domain-specific follow-ups grounded in operational context. Other approaches use step-by-step prompting to refine queries or predict what users might ask next. Generic LLM follow-up questions, however, often miss the mark in specialized use cases.
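A minimal sketch of a knowledge-driven follow-up, assuming a small asset graph that maps equipment types to known instances with their location and last alarm. Everything here (`ASSET_GRAPH`, the asset IDs, the `SYMPTOM_TO_ALARM` mapping) is hypothetical; the point is that the clarifying question is built from operational data rather than generated generically.

```python
# Hypothetical asset knowledge graph: equipment type -> known instances and context.
ASSET_GRAPH = {
    "motor": [
        {"id": "M-101", "location": "conveyor line 1", "last_alarm": "vibration"},
        {"id": "M-204", "location": "cooling tower pump", "last_alarm": "over-temperature"},
        {"id": "M-305", "location": "mixer", "last_alarm": None},
    ],
    "pump": [
        {"id": "P-12", "location": "boiler room", "last_alarm": None},
    ],
}
# Hypothetical mapping from symptom keywords to alarm types (naive substring match).
SYMPTOM_TO_ALARM = {"hot": "over-temperature", "overheat": "over-temperature", "vibrat": "vibration"}


def clarify_or_resolve(query: str, graph: dict) -> dict:
    q = query.lower()
    # 1. An explicitly named asset needs no clarification.
    for instances in graph.values():
        for asset in instances:
            if asset["id"].lower() in q:
                return {"action": "retrieve", "asset": asset["id"]}
    # 2. A generic equipment type: ask which instance, listing the likeliest first.
    alarm = next((alarm_type for key, alarm_type in SYMPTOM_TO_ALARM.items() if key in q), None)
    for asset_type, instances in graph.items():
        if asset_type in q:
            # Stable sort: assets whose last alarm matches the symptom come first.
            ranked = sorted(instances, key=lambda a: alarm is None or a["last_alarm"] != alarm)
            options = ", ".join(f'{a["id"]} ({a["location"]})' for a in ranked)
            question = f"Which {asset_type} do you mean: {options}?"
            if alarm is not None and ranked[0]["last_alarm"] == alarm:
                question += f' The last alarm on {ranked[0]["id"]} was {alarm}.'
            return {"action": "clarify", "question": question}
    # 3. Nothing recognizable: fall back to a generic follow-up.
    return {"action": "clarify", "question": "Which piece of equipment is affected, and where is it located?"}


print(clarify_or_resolve("Something's wrong with one of the motors, it's running hot. Any thoughts?", ASSET_GRAPH))
```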

By integrating these strategies, RAG systems can move from simple retrieval to more interactive, context-aware AI assistants.

Multi-Modal Retrieval

In addition to the above issues, user queries often demand answers that go beyond text, requiring insights from diagrams, charts, tables, or schematics. Naïve RAG systems focused on text can’t handle such multimodal content.

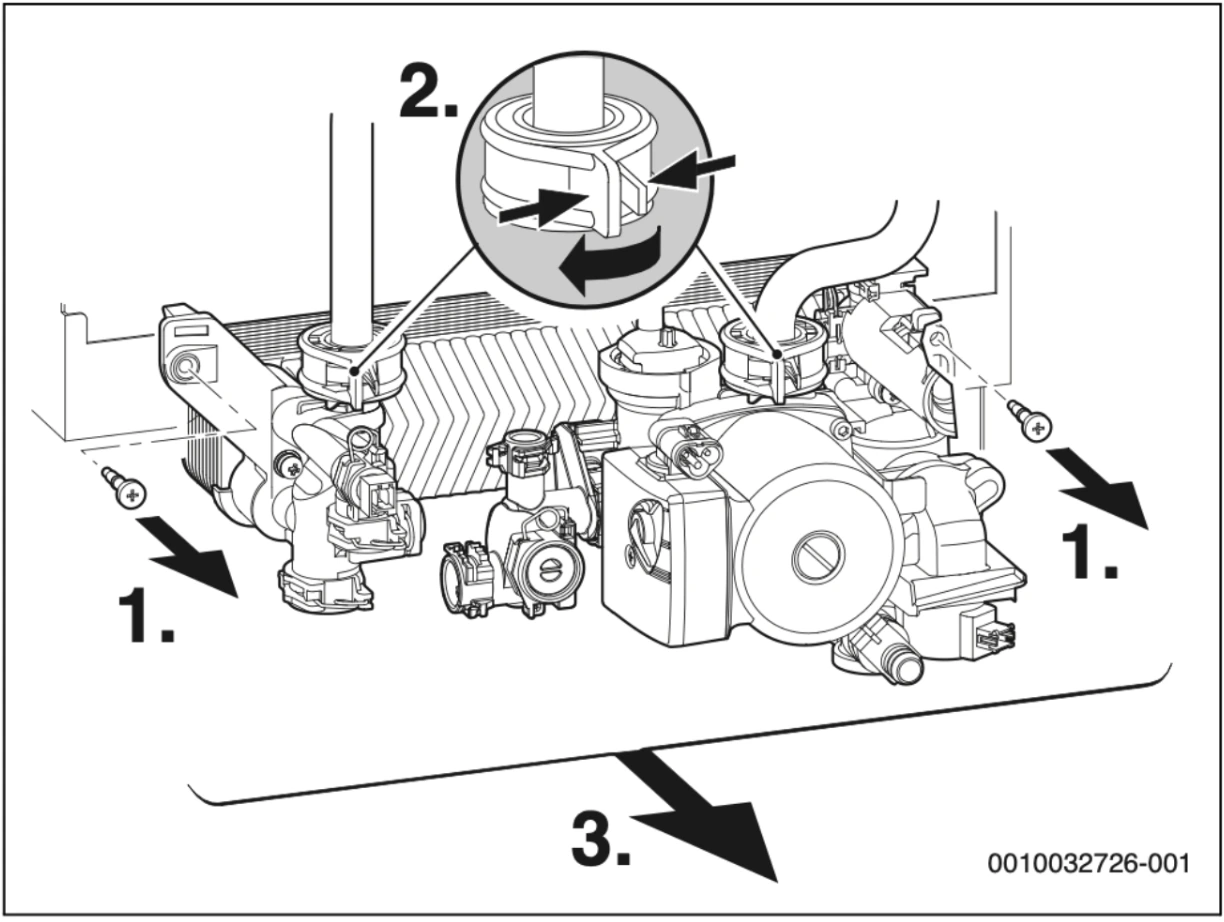

Example: A query about “removing the pump unit from the boiler” may require both an illustration and procedural text.

Figure of the pump unit of a boiler (from a Bosch manual)

Potential Solutions

Recent advancements address this with unified multimodal embedding spaces, such as CLIP and MM-Embed, that align text and images for better cross-modal retrieval. Techniques like Contrastive Localized Language-Image Pre-Training improve fine-grained understanding by linking specific image regions to text. In addition, multimodal LLMs such as Llama 4, GPT-4o, Qwen-VL, and IBM Granite Vision can generate summaries of images; these summaries are embedded alongside text in vector databases, so a single retrieval step can surface insights from both text and visuals. Together, these advancements let systems handle complex multimodal queries with greater accuracy and produce more complete, contextually rich answers.
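The image-summary strategy can be sketched as follows, assuming some multimodal LLM is wrapped as a `describe_image(path) -> str` function (hypothetical; faked here). Each image is indexed through its generated summary and tagged with its modality and source so answers can cite the figure, then searched together with ordinary text chunks. Bag-of-words similarity stands in for a real embedding model, and the page references are invented.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import Callable


@dataclass
class Record:
    content: str   # text chunk, or the generated summary of an image
    modality: str  # "text" or "image"
    source: str    # where it came from, kept for traceability


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words term frequencies."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MultimodalIndex:
    def __init__(self, describe_image: Callable[[str], str]) -> None:
        self.describe_image = describe_image  # assumed wrapper around a multimodal LLM
        self.records: list[Record] = []
        self.vectors: list[Counter] = []

    def _add(self, record: Record) -> None:
        self.records.append(record)
        self.vectors.append(embed(record.content))

    def add_text(self, text: str, source: str) -> None:
        self._add(Record(text, "text", source))

    def add_image(self, image_path: str) -> None:
        # Images enter the same vector space as text, via their generated summary.
        self._add(Record(self.describe_image(image_path), "image", image_path))

    def search(self, query: str, k: int = 2) -> list[Record]:
        q = embed(query)
        order = sorted(range(len(self.records)), key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.records[i] for i in order[:k]]


def fake_describe_image(path: str) -> str:
    # Stand-in for a multimodal LLM call.
    return "Diagram of the boiler pump unit showing the two retaining screws and the pump connector."


index = MultimodalIndex(fake_describe_image)
index.add_text("To remove the pump unit, isolate power, drain the boiler, then undo the retaining screws.", "manual p. 12")
index.add_text("Set the heating curve via the control panel menu.", "manual p. 30")
index.add_image("figures/pump_unit.png")
for record in index.search("removing the pump unit from the boiler"):
    print(record.modality, record.source)
```

The pump-removal query returns both the illustration and the procedural text, which is the combination the boiler example above calls for.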

In conclusion, advancing beyond naïve RAG in conversational AI for long documents requires a careful blend of domain-specific knowledge and advanced retrieval techniques. At Vivoka, we leverage our expertise in both cutting-edge research and in-house innovative retrieval solutions to tailor the most effective approaches for each unique use case. By combining the best of both worlds, we ensure our systems deliver more accurate, contextually aware, and actionable insights, empowering enterprises to navigate complex, real-world challenges with ease.