When vision meets voice: a smart eyewear perspective

Smart glasses are wearables, like VR headsets, watches, earphones and the like. They embed advanced technologies that are miniaturized to fit inside small devices. With great features comes great complexity. To embrace wearables, whether as individuals or as workers, users need specific ways to interact with them. On this road to mass adoption, makers of smart eyewear of all shapes have come to the conclusion that voice is one of the many ways to control such devices.

Smart eyewear, like other smart devices, are microcomputers. Because they are worn as equipment, users cannot treat them the same way as smartphones or computers. The latter are designed to be operated by hand, whereas glasses sit on the head, close to the human senses and faculties that are not touch-related: vision, hearing or… voice! But (yes, we always need to confront things) today’s voice capabilities are not yet what we, as users, expect them to be. This is what our fancy headline is about: complex, yet accessible, voice technologies inside AR smart glasses. Of course, other wearable devices can and surely will benefit from voice, but we see smart eyewear as the perfect case study. First, let’s take a look at what we are talking about…

A (quick) overview of what smart glasses are like nowadays

Smart glasses are not new. In fact, they have been available for a couple of years and are actively evolving. In this technical panorama, we of course see the tech giants (Google, Amazon, Xiaomi, Facebook…), who mainly want their glasses to be worn by everyone. Alongside them, many other companies (Vuzix, RealWear, Magic Leap, Rokid…) have emerged. They either pursue the same goal or address different areas such as enterprise equipment. This blog post is not about listing all of them. Other blogs have catalogued AR smart glasses much better than we could. They provide a lot of detail about features, and we invite you to check them out after reading what’s next.

What prevents smart eyewear from rising to the top of the “smart devices” panorama?

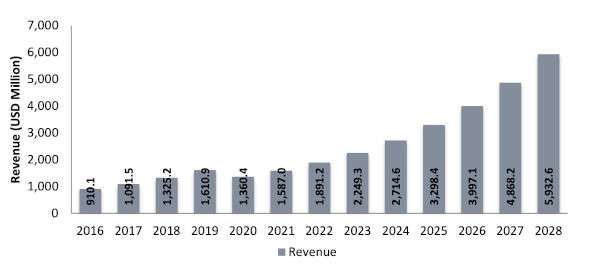

With an impressive forecast of $12.8 billion in revenue by 2028, smart glasses are expected to reach mass adoption… But why is it taking so long? According to the 2021 Facts & Factors analysis, high device cost, dependence on an internet connection, immature technology and the time commitment required at the development stage are the major factors that weaken smart glasses’ perceived utility, and thus demand.

Facts & Factors Analysis – Global Smart Eyewear Market for Augmented Reality, Revenue (USD Million) 2016 – 2028

In addition to these criteria, device sustainability and user adoption in different environments (professional or personal) are also key factors that threaten the evolution of smart glasses. Fortunately, some industries are increasingly supporting smart eyewear, at least in a B2B context:

- Military & Defense

- Healthcare

- Manufacturing

- Education

- Gaming…

For them, high-value use cases are within reach and could enhance current features and/or workflows. With obvious demand but a lack of adoption, what can be done to make smart glasses one of the 21st century’s big revolutions?

Feature capabilities?

Smart glasses are basically microcomputers that users wear on their heads. Users already have access to a plethora of use cases (AR visualization, remote communication and image recognition, for instance) thanks to compatible technologies and advances in the hardware that supports them. But all in all, voice is not that developed: simple voice commands are treated as the standard, and little goes beyond them. In fact, voice could bring many more uses to such devices, yet hardly anyone has stepped in this direction… What else?

Interactions?

Unlike computers or smartphones, which are meant to be used with the hands, head-mounted smart glasses don’t come with a keyboard and mouse or a touchscreen… So how do users interact with them? Touch and buttons are the first things that come to mind, but their size and position limit how convenient they can be. To be adopted and promoted, innovative devices have to be easy to understand and use, even in their most complex features. This is where voice is the most “mature” way today to interact with smart head-mounted displays!

Cognitive technologies to the rescue of smart eyewear lacking ergonomics

We can sort cognitive technologies into three feasible solutions today: gesture, eye and voice recognition. The first is promising but still lacks accuracy and performance, sometimes requires additional hardware, and isn’t that convenient given that smart glasses are supposed to be hands-free. Eye tracking is something many are looking forward to; the technology isn’t ready yet but is really promising. Speech recognition, then? Not all smart glasses are currently equipped with voice-based capabilities, but most of the “big” ones are, and there’s a reason for that. Logically enough, smart glasses are positioned where most human senses are: voice, vision, hearing… This is why voice has been such an interesting lead in these devices’ development and evolution. But it needs to keep moving forward.

Disclaimer: we are not saying that voice is the only thing preventing smart glasses from shining. Many have thought about it before, and many have already equipped their devices with it. We just want to promote voice recognition in a use case where it is seen not as a gadget but as a real, relevant and beneficial way of interacting with technology! And that is with simple voice commands alone; imagine what the whole range of solutions we have could do…

Who is currently providing voice in this industry?

So who’s behind the “voices” in smart eyewear? Google, Facebook, Amazon, Microsoft, Nuance (which could now be counted under Microsoft), Vivoka, or custom company-specific software. Of course, this list is not exhaustive, but it covers most of the market.

Why is it not “that good” yet?

First of all, speech recognition accuracy. General-purpose voice engines can transcribe most commands and intents but have a hard time with the complex vocabularies that are quite common in professional environments. Latency is an issue as well when working with cloud technologies that depend on an internet connection, which is especially critical in remote areas. The lack of language support and of accent/dialect coverage is also disappointing for companies that want to deploy their products globally and work with many different populations. Finally, so far only voice transcription and wake-up words have been used in smart glasses (and in vision-based devices as well). There is a huge space left open for new use cases with the range of technologies available.

How we think smart glasses should handle voice assistance

Sure, drastic innovations are great. As tech-savvy people or users, we are always looking for crazy new features, especially in voice assistance. But are they always necessary? Most users, when trying something new, are quickly disappointed by failures. So why not do less, but do it well? For instance, one of Vivoka’s latest clients, Vuzix, has chosen the Voice Development Kit to build embedded voice commands for navigation purposes, and it works amazingly well in terms of speed and accuracy (and 43 languages are supported…).
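To make the idea of a small, reliable command set a bit more concrete, here is a minimal Python sketch. It is not the Voice Development Kit’s API and says nothing about how Vuzix built its commands: the command phrases, action names and the `match_command` helper are all hypothetical, and the fuzzy matching uses Python’s standard `difflib` module as a stand-in for a real embedded recognizer. It only shows why a closed list of phrases is easier to get right than open-ended transcription: anything that is not close enough to a known command is simply rejected.

```python
from difflib import get_close_matches

# Hypothetical navigation commands and action names (illustration only,
# not taken from any real product or SDK).
COMMANDS = {
    "go back": "NAV_BACK",
    "go home": "NAV_HOME",
    "scroll down": "SCROLL_DOWN",
    "scroll up": "SCROLL_UP",
    "take picture": "CAMERA_CAPTURE",
}


def match_command(transcript, cutoff=0.75):
    """Return the action for the closest known phrase, or None if nothing is close enough."""
    # Normalize case and collapse repeated whitespace before comparing.
    phrase = " ".join(transcript.lower().split())
    # Keep only the single best candidate whose similarity reaches the cutoff.
    hits = get_close_matches(phrase, list(COMMANDS), n=1, cutoff=cutoff)
    return COMMANDS[hits[0]] if hits else None


if __name__ == "__main__":
    for heard in ["Go home", "scroll dwn", "order a pizza"]:
        print(repr(heard), "->", match_command(heard))
```

The `cutoff` value is an arbitrary choice for this sketch: raising it rejects more near-misses, while lowering it tolerates noisier transcripts at the risk of triggering the wrong command.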

Embedded voice assistance for the win

Cloud technologies are great, and their capabilities have nearly no boundaries. But no internet means no cloud, and poor internet means high latency. Mix this however you want, the problem remains. Of course, where there is a problem, there is a solution. Embedded technologies have been around for decades and often stay in the shadow of what cloud technologies can do. But embedded means no internet connection and no data leaving the device (privacy approves). Moreover, with the latest advances in model size and capability, embedded technology has serious answers for its detractors. It will also appeal to a lot of companies thanks to its resilience. If we want to go for crazy features, we can surely mention voice biometrics for quick and seamless authentication and identification, or text-to-speech to provide audio information. Indeed, voice is not only about giving orders to a machine; it can also bring a far more “human” relationship with it.

We are more than confident in the future of voice technologies in wearables, especially vision-based ones. There are a lot of natural complementarities between the various solutions and what they specialize in. At this point, you probably want to know what all of this can bring to smart glasses (and wearables of all sorts). You may also want to know what kind of use cases we can invent and create with these different voice technologies… We suggest you subscribe to receive next week’s blog post on that specific subject (or come back next week…)!