We are living in an era shaped by cognitive technologies such as virtual and augmented reality, visual recognition and speech recognition!

Yet even though the “Voice Generation”, born in the middle of this technology’s expansion, is best placed to grasp it, and many people talk about it, few really know how it works or what solutions are available.

That is why we invite you to discover speech recognition in detail in this article. Of course, it only covers the basics needed to understand the field of speech technologies; other articles on our blog cover some topics in more depth.

“Strength in numbers”: the components of speech recognition

In the following explanations, we consider “speech recognition” to cover a complete cycle of voice interaction.

Speech recognition relies on several complementary technologies from the same field. We will present each of them in chronological order, from the moment the user speaks until the command is carried out.

It should be noted that the technologies presented below can be used independently of each other and cover a wide range of applications. We will come back to this later.
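The complete cycle can be pictured as a chain of five stages. The minimal Python sketch below wires them together as plain functions, so any stage can be swapped out or reused on its own; the class and field names are hypothetical, and the stub lambdas merely stand in for real wake word, STT, NLP, AI and TTS engines.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class VoicePipeline:
    """Hypothetical chain of the five stages described in this article."""
    detect_wake_word: Callable[[bytes], bool]   # wake word
    speech_to_text: Callable[[bytes], str]      # STT
    understand: Callable[[str], dict]           # NLP -> normalized command
    execute: Callable[[dict], str]              # AI / use case
    text_to_speech: Callable[[str], bytes]      # TTS

    def run(self, wake_audio: bytes, command_audio: bytes) -> Optional[bytes]:
        # None of the later stages run unless the system was woken up.
        if not self.detect_wake_word(wake_audio):
            return None
        text = self.speech_to_text(command_audio)
        command = self.understand(text)
        answer = self.execute(command)
        return self.text_to_speech(answer)


# Stub stages standing in for real engines.
pipeline = VoicePipeline(
    detect_wake_word=lambda audio: audio == b"wake",
    speech_to_text=lambda audio: audio.decode(),
    understand=lambda text: {"intent": text},
    execute=lambda command: "done: " + command["intent"],
    text_to_speech=lambda answer: answer.encode(),
)
print(pipeline.run(b"wake", b"lights on"))  # b'done: lights on'
```

Keeping each stage behind a simple function signature is one way to reflect the fact that these technologies can be combined or used independently.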

The wake word, activating speech recognition with the voice

The first step, which initiates the whole process, is called the wake word. The main purpose of this first technology in the cycle is to let the user activate listening with his or her voice, so that the system can then detect the voice command he or she wishes to give.

Here, it is literally a matter of “waking up” the system. Although listening can be triggered in other ways, keeping the voice in use throughout the cycle is, in our opinion, essential: it offers a seamless experience in which voice is the only interface.

The trigger word also brings several inherent benefits to the design of voice assistants.

In our context, one of the main concerns about speech recognition is the protection of the personal data contained in audio recordings. This privacy concern has been further amplified by the recent arrival of the GDPR (General Data Protection Regulation), the European regulation governing the processing of personal data.

This is why the trigger word is so important. Because recording is conditioned on it, nothing is recorded, in theory, until the trigger word has been clearly identified. In theory, because in practice it depends on each company’s data policy. Embedded (offline) speech recognition, which processes the voice on the device itself, is an alternative that avoids this risk.

Once activation is confirmed, only the sentences carrying the intent of the action to be performed are recorded and analyzed to carry out the use case.
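The gating principle can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: `detector` is a hypothetical model returning a confidence score per frame, frames are represented by short strings instead of real audio chunks, and the command length is a fixed number of frames, whereas real systems detect the end of speech.

```python
from typing import Callable, List, Optional

Frame = str  # stand-in for a short chunk of audio samples


class WakeWordGate:
    """Drops audio frames until a wake word is detected, then keeps a fixed
    number of command frames. `detector` is a hypothetical model returning a
    confidence score between 0 and 1 for each frame."""

    def __init__(self, detector: Callable[[Frame], float],
                 threshold: float, command_frames: int) -> None:
        if command_frames < 1:
            raise ValueError("command_frames must be at least 1")
        self.detector = detector
        self.threshold = threshold
        self.command_frames = command_frames
        self._remaining = 0               # frames still to record; 0 means idle
        self._recording: List[Frame] = []

    def feed(self, frame: Frame) -> Optional[List[Frame]]:
        """Process one frame; return the recorded command once it is complete."""
        if self._remaining == 0:
            # Idle: score the frame, then discard it without storing it.
            if self.detector(frame) >= self.threshold:
                self._remaining = self.command_frames
                self._recording = []
            return None
        # Listening: keep only the frames that follow the wake word.
        self._recording.append(frame)
        self._remaining -= 1
        return self._recording if self._remaining == 0 else None


gate = WakeWordGate(lambda f: 1.0 if f == "WAKE" else 0.0, threshold=0.5, command_frames=2)
results = [gate.feed(f) for f in ["chat", "chat", "WAKE", "lights", "on", "chat"]]
print([r for r in results if r is not None])  # [['lights', 'on']]
```

The frames spoken before the wake word ("chat") are scored and then dropped, which is the property the privacy argument above relies on.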

To learn more about the Wake-up Word, we invite you to read our article on Google’s Wake-up Word and the best practices to find your own!

Speech to Text (STT), identifying and transcribing voice into text

Once speech recognition has been initiated with the trigger word, the voice must be processed. To do this, it must first be recorded and digitized so that Speech to Text technology (also known as automatic speech recognition) can transcribe it.

During this stage, the voice is captured as an audio signal (stored as audio data, like music or any other sound) that can then be processed.

Depending on the listening environment, noise may or may not be present. To improve the quality of the recording, and therefore its reliability, different treatments can be applied:

- Normalization, to bring the signal to a consistent volume level and harmonize the whole.

- Background noise removal, to improve audio quality.

- Segmentation into phonemes (the smallest distinctive units of sound, whose durations are measured in milliseconds, which allow words to be distinguished from one another).
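To make these treatments more concrete, here is a minimal pure-Python sketch of simplified versions of the first two, plus the framing step recognizers usually start from. It is only an illustration: peak normalization scales the whole signal to a target level, the energy gate simply drops quiet frames (real noise removal, such as spectral subtraction, is far more elaborate), and the 25 ms frames with a 10 ms hop are a common convention, not phoneme segmentation itself, which the model infers later.

```python
import math
from typing import List


def peak_normalize(samples: List[float], target: float = 0.9) -> List[float]:
    """Scale the signal so that its loudest sample reaches `target`."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence: nothing to scale
    gain = target / peak
    return [s * gain for s in samples]


def frame_signal(samples: List[float], sample_rate: int,
                 frame_ms: int = 25, hop_ms: int = 10) -> List[List[float]]:
    """Cut the signal into overlapping fixed-length frames."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def rms(frame: List[float]) -> float:
    """Root-mean-square energy of a frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def energy_gate(frames: List[List[float]], threshold: float) -> List[List[float]]:
    """Crude stand-in for noise removal: drop frames quieter than `threshold`."""
    return [f for f in frames if rms(f) >= threshold]


# 50 ms of silence followed by 50 ms of a 440 Hz tone, sampled at 16 kHz.
signal = [0.0] * 800 + [0.4 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(800)]
frames = frame_signal(peak_normalize(signal), sample_rate=16000)
voiced = energy_gate(frames, threshold=0.05)
print(len(frames), len(voiced))
```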

Once recorded, the audio can be analyzed to associate phonemes with a word or group of words and build up a text. This step can be done in different ways, but one approach in particular is the state of the art today: Machine Learning.

A subfield of machine learning, Deep Learning, relies on artificial neural networks with many layers. Trained on large amounts of transcribed speech, such a network learns the associations between sounds and words, stored in its internal weights rather than in a lookup database, and uses them to deduce the transcription of speech it has never heard before.

Therefore, the more training data there is, the more accurate the model is, statistically speaking, and the better it can take the general context into account to assign the most likely word given the words already recognized.
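The role of context can be illustrated with a toy example. The Python sketch below is not deep learning: it is a simple bigram language model, a much older statistical technique, which counts which word follows which in a small made-up corpus and uses those counts to choose between homophones that sound alike. It does show the idea in the text: more data gives better counts, and the previous word guides the choice.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List


def train_bigrams(sentences: Iterable[str]) -> Dict[str, Counter]:
    """Count how often each word follows each previous word."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split()  # <s> marks sentence start
        for previous, word in zip(words, words[1:]):
            counts[previous][word] += 1
    return counts


def pick_word(counts: Dict[str, Counter], previous: str, candidates: List[str]) -> str:
    """Return the candidate seen most often after `previous` (first one on ties)."""
    following = counts.get(previous.lower(), Counter())
    return max(candidates, key=lambda word: following[word])


# Hypothetical training sentences; a real model learns from vastly more text.
corpus = [
    "I want to go home",
    "take me to the station",
    "I have two tickets",
    "buy two tickets please",
]
model = train_bigrams(corpus)
print(pick_word(model, "want", ["two", "to", "too"]))  # to
print(pick_word(model, "have", ["to", "two", "too"]))  # two
```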

Limiting STT errors is essential, since all the next steps depend on the reliability of the transcribed text.
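Transcription errors are commonly measured with the word error rate (WER): the minimum number of word substitutions, deletions and insertions needed to turn the recognized text into a reference transcript, divided by the number of words in the reference. A minimal Python sketch using a standard edit-distance table:

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # dist[i][j] = edit distance between the first i reference words
    # and the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # i deletions
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)


# One substituted word out of six reference words.
print(word_error_rate("take me to a gas station", "take me to the gas station"))
```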

NLP (Natural Language Processing), translating human language into machine language

Once the previous steps have been completed, the text is sent directly to the NLP (Natural Language Processing) module. The main purpose of this technology is to analyze the sentence and extract as much linguistic information as possible.

To do this, it first splits the sentence into words (tokenization), then attaches “tags” to each word in order to characterize it (part-of-speech tagging). For example, in “Open the Voice Development Kit”, “Open” is tagged as a verb expressing an action, “the” as the determiner introducing “Voice Development Kit”, which is a proper noun and also the direct object, and so on for each element of the sentence.
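Both operations can be sketched on the example sentence. This minimal Python version splits the text with a regular expression and tags words from a tiny hand-written lexicon; the lexicon, the multi-word name list and the default NOUN tag are assumptions for illustration (the tag names follow the Universal Dependencies convention), whereas real NLP modules use trained taggers.

```python
import re
from typing import List, Tuple

# Hypothetical mini-lexicon; real taggers are trained on annotated corpora.
LEXICON = {"open": "VERB", "close": "VERB", "take": "VERB",
           "the": "DET", "a": "DET", "me": "PRON", "to": "ADP"}
# Multi-word proper nouns the system knows about.
PROPER_NOUNS = [("voice", "development", "kit")]


def tokenize(sentence: str) -> List[str]:
    """Split a sentence into word tokens, dropping punctuation."""
    return re.findall(r"[A-Za-z']+", sentence)


def tag(tokens: List[str]) -> List[Tuple[str, str]]:
    """Attach a part-of-speech tag to each token (or known multi-word name)."""
    lowered = [t.lower() for t in tokens]
    tagged: List[Tuple[str, str]] = []
    i = 0
    while i < len(tokens):
        for name in PROPER_NOUNS:
            if tuple(lowered[i:i + len(name)]) == name:
                tagged.append((" ".join(tokens[i:i + len(name)]), "PROPN"))
                i += len(name)
                break
        else:  # no known name starts here: look the single word up
            tagged.append((tokens[i], LEXICON.get(lowered[i], "NOUN")))
            i += 1
    return tagged


print(tag(tokenize("Open the Voice Development Kit")))
# [('Open', 'VERB'), ('the', 'DET'), ('Voice Development Kit', 'PROPN')]
```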

Once these first elements have been identified, meaning must be given to the commands produced by speech recognition. This is why two complementary analyses are performed.

First, syntactic analysis models the structure of the sentence. It identifies the place of each word within the whole, as well as its position relative to the others, in order to understand how they relate.

Then comes semantic analysis which, once the nature and position of the words are known, tries to understand their meaning both individually and as assembled in the sentence, in order to derive the user’s overall intent.

The importance of NLP in speech recognition lies in its ability to translate textual elements (i.e. words and sentences) into normalized commands, including meaning and intent, that the associated artificial intelligence can interpret and carry out.
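A normalized command can be as simple as an intent name plus named parameters, often called slots. The Python sketch below uses hand-written regular expressions instead of real syntactic and semantic analysis, and its intent and slot names are hypothetical, but it shows the kind of structured output an NLP module can hand over to the next stage.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Union

# Hypothetical rules: one regular expression per intent.
RULES = [
    ("open_app", re.compile(r"^open (?:the )?(?P<target>.+)$", re.IGNORECASE)),
    ("navigate", re.compile(
        r"^take me to (?:a |an |the )?(?P<destination>.+?)(?P<en_route> on the way)?$",
        re.IGNORECASE)),
]


@dataclass
class Command:
    intent: str
    slots: Dict[str, Union[str, bool]] = field(default_factory=dict)


def parse(text: str) -> Command:
    """Map a transcript to a normalized command, or to the 'unknown' intent."""
    cleaned = text.strip().rstrip(".!?")
    for intent, pattern in RULES:
        match = pattern.match(cleaned)
        if match is None:
            continue
        slots = {name: value for name, value in match.groupdict().items()
                 if value is not None}
        if "en_route" in slots:
            slots["en_route"] = True  # keep the current route and add a stop
        return Command(intent, slots)
    return Command("unknown")


print(parse("Take me to a gas station on the way"))
# Command(intent='navigate', slots={'destination': 'gas station', 'en_route': True})
```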

Artificial intelligence, a necessary ally of speech recognition

Although artificial intelligence is already integrated into the previous technologies, it is not always essential to carry out the use cases themselves. For connected (i.e. cloud) technologies, however, AI is useful, all the more so since the complexity of some use cases, especially the amount of information that must be correlated to carry them out, makes it mandatory.

For example, it is sometimes necessary to cross-reference several pieces of information with the actions to be carried out, integrate external or internal services, or consult databases.

In other words, artificial intelligence is the use case itself: the concrete action that results from the voice interface. Depending on the context of use and the nature of the command, the information requested and the results returned will differ.

Let’s take an example. Vivoka has created a connected motorcycle helmet whose features, such as GPS navigation or music, can be used by voice.

The request “Take me to a gas station on the way” will produce a normalized command for the artificial intelligence, carrying the user’s intent:

- Context: Vehicle fuel type, price preference (which affects the distance travelled)

- External services: Call the API of the GPS solution provider

- Action to be performed: Keep the current route and add a stop along it

Here, our system’s intelligence sends the relevant information and a request to an external service with its own specialized intelligence, which returns the result our system then acts on for the user.
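The helmet example can be sketched in Python. Everything here is hypothetical: the `GpsProvider` interface, its `stations_along_route` method and the fake provider stand in for whatever API the real GPS supplier exposes. The sketch only shows how the context (fuel type, price preference) filters and ranks the external service’s answer before the action keeps the route and adds a stop.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Protocol


@dataclass(frozen=True)
class Station:
    name: str
    fuel_types: FrozenSet[str]
    price_per_litre: float
    detour_km: float


@dataclass
class RefuelCommand:
    fuel_type: str         # context: vehicle fuel type
    prefer_cheapest: bool  # context: price preference (may lengthen the trip)
    route: List[str]       # current route, from start to destination


class GpsProvider(Protocol):
    """Hypothetical interface to the GPS supplier's API."""
    def stations_along_route(self, route: List[str]) -> List[Station]: ...


def add_refuel_stop(command: RefuelCommand, provider: GpsProvider) -> List[str]:
    """Keep the current route and insert the best matching station before the destination."""
    candidates = [s for s in provider.stations_along_route(command.route)
                  if command.fuel_type in s.fuel_types]
    if not candidates:
        return list(command.route)  # nothing suitable: route unchanged
    if command.prefer_cheapest:
        best = min(candidates, key=lambda s: (s.price_per_litre, s.detour_km))
    else:
        best = min(candidates, key=lambda s: (s.detour_km, s.price_per_litre))
    return command.route[:-1] + [best.name] + command.route[-1:]


class FakeProvider:
    """Stand-in returning fixed data instead of calling a real API."""
    def __init__(self, stations: List[Station]) -> None:
        self.stations = stations

    def stations_along_route(self, route: List[str]) -> List[Station]:
        return list(self.stations)


provider = FakeProvider([
    Station("Station A", frozenset({"petrol", "diesel"}), 1.90, 0.5),
    Station("Station B", frozenset({"petrol"}), 1.70, 4.0),
])
command = RefuelCommand(fuel_type="petrol", prefer_cheapest=True, route=["Home", "Lyon"])
print(add_refuel_stop(command, provider))  # ['Home', 'Station B', 'Lyon']
```

With `prefer_cheapest=False`, the nearer but more expensive Station A would be chosen instead, which is how the price preference affects the distance travelled.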

AI is therefore a key component in many situations. However, embedded (i.e. offline) features have lighter needs, since they are closer to simple commands such as navigating an interface or reporting actions. These are specific use cases that do not require consulting multiple sources of information.
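For such embedded use cases, a closed list of commands can be enough. This Python sketch, with a hypothetical command table and action codes, maps a transcript to an action by exact lookup and falls back on the standard library’s `difflib.get_close_matches` to tolerate small transcription errors; no external service or AI model is involved.

```python
import difflib
from typing import Optional

# Hypothetical closed grammar for an offline interface.
COMMANDS = {
    "next page": "NAV_NEXT",
    "previous page": "NAV_PREVIOUS",
    "go back": "NAV_BACK",
    "task done": "REPORT_DONE",
    "confirm": "CONFIRM",
}


def match_command(transcript: str, cutoff: float = 0.8) -> Optional[str]:
    """Return the action code for a transcript, or None if nothing is close enough."""
    text = " ".join(transcript.lower().split())  # normalize case and spacing
    if text in COMMANDS:
        return COMMANDS[text]
    close = difflib.get_close_matches(text, list(COMMANDS), n=1, cutoff=cutoff)
    return COMMANDS[close[0]] if close else None


print(match_command("Next  Page"))     # NAV_NEXT
print(match_command("next paige"))     # NAV_NEXT (close match)
print(match_command("order a pizza"))  # None
```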

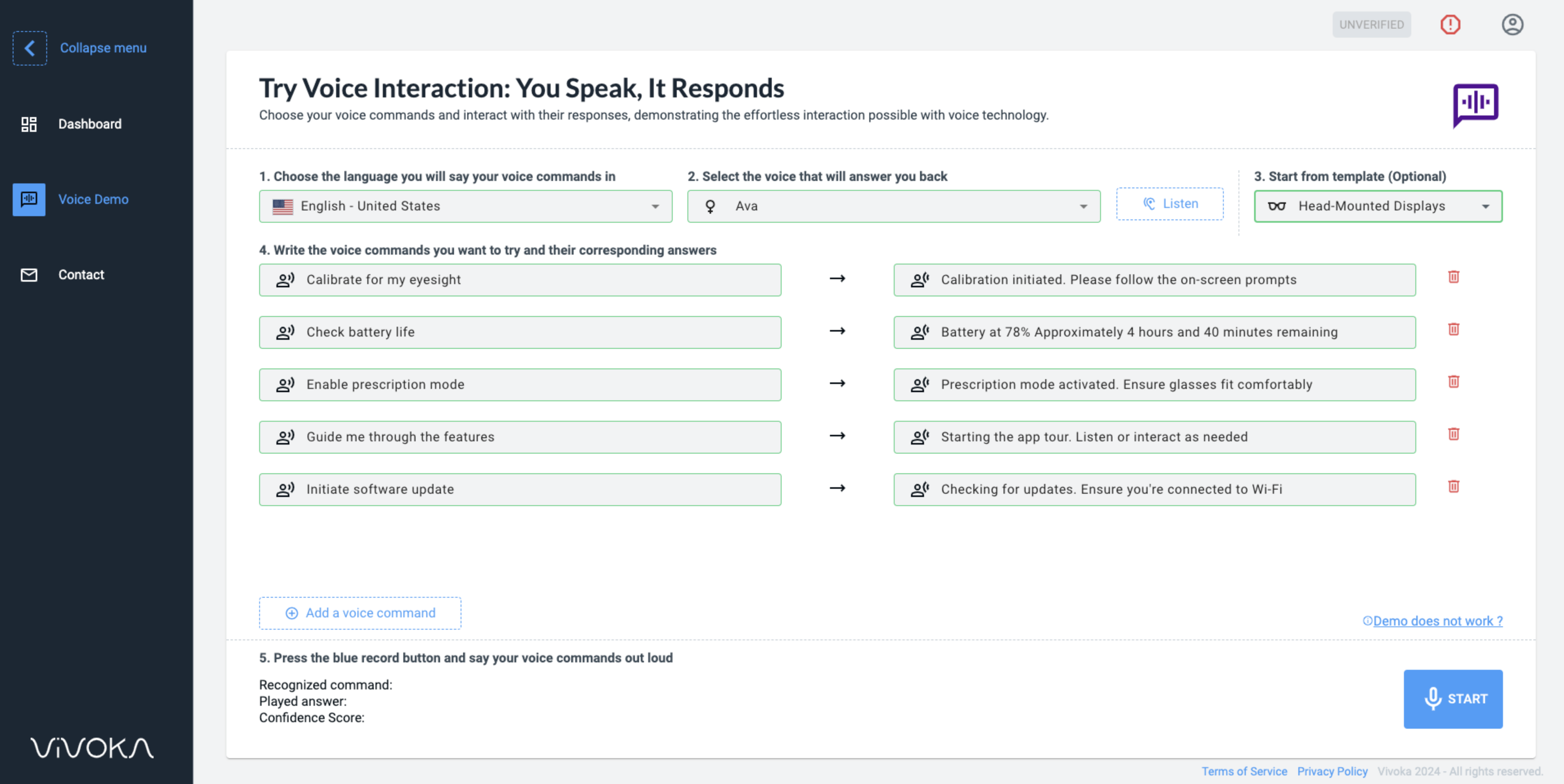

TTS (Text to Speech), voice to answer and inform the user

Finally, TTS (Text-to-Speech) concludes the process. It provides the system’s feedback through a synthetic voice. In the same spirit as the Wake-up Word, it closes the cycle by answering vocally, which keeps the conversational interface consistent.

Speech synthesis is built from human voices, and the resulting voices vary by language, gender, age or mood. Synthetic voices are then generated in real time to speak words or sentences by assembling phonetic units.
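The phonetic assembly can be illustrated with a classic approach, concatenative synthesis, in which stored units are joined end to end. In this Python sketch the unit inventory is hypothetical: plain sine tones stand in for recorded human speech units, and a short linear crossfade smooths each join. Many modern engines instead generate the waveform with neural models.

```python
import math
from typing import Dict, List

SAMPLE_RATE = 16000  # samples per second


def tone(freq_hz: float, duration_ms: int) -> List[float]:
    """Placeholder 'recording': a sine tone of the given pitch and length."""
    n = int(SAMPLE_RATE * duration_ms / 1000)
    return [0.5 * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


# Hypothetical unit inventory: one placeholder unit per phoneme.
UNITS: Dict[str, List[float]] = {
    "h": tone(300, 60), "e": tone(500, 120), "l": tone(350, 80), "o": tone(450, 140),
}


def crossfade_concat(units: List[List[float]], overlap: int) -> List[float]:
    """Join units end to end, blending `overlap` samples at each boundary."""
    out: List[float] = []
    for unit in units:
        if not out:
            out = list(unit)
            continue
        k = min(overlap, len(out), len(unit))
        for i in range(k):
            w = (i + 1) / (k + 1)  # weight of the incoming unit grows across the overlap
            out[-k + i] = out[-k + i] * (1 - w) + unit[i] * w
        out.extend(unit[k:])
    return out


def synthesize(phonemes: List[str], overlap_ms: int = 10) -> List[float]:
    """Assemble the units for a phoneme sequence into one waveform."""
    overlap = int(SAMPLE_RATE * overlap_ms / 1000)
    return crossfade_concat([UNITS[p] for p in phonemes], overlap)


samples = synthesize(["h", "e", "l", "o"])
print(len(samples))  # 5920: 6400 samples minus three 160-sample overlaps
```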

This speech technology is useful for communicating information to the user; it is the hallmark of a complete human-machine interface and of a well-designed user experience.

Similarly, it represents an important dimension of Voice Marketing, because synthesized voices can be customized to match the image of the brands that use them.

The different speech recognition solutions

The speech recognition market is a fast-moving environment. As technological progress keeps creating and reinventing use cases, the adoption of speech solutions is driving innovation and attracting many players.

Today, the market spans several major categories of speech recognition use. Among them:

Voice assistants

This category includes the GAFA companies (Google, Apple, Facebook, Amazon) and their multi-device virtual assistants (smart speakers, phones, etc.), but also initiatives from other companies. Personalized voice assistants are a trend on the fringe of GAFA’s market domination, as brands seek to regain control over their technology.

For example, KSH, with its connected motorcycle helmet, is one of those players with specific needs, both marketing and functional.

Professional voice interfaces

These are productivity tools for employees. One of the fastest-growing sectors is the supply chain, with pick-by-voice: a voice device that lets operators use speech recognition to work more efficiently and safely (hands-free, better concentration, etc.). The voice commands mostly consist of reporting actions and confirming completed operations.

There are many possibilities for companies to gain in productivity. Some use cases already exist and others will be created.

Speech recognition software

Voice dictation, for example, is already used by thousands of people, personally or professionally (DS Avocats, for instance). It allows users to dictate text, such as emails or reports, at a rate of 180 words per minute, whereas typing averages 60 words per minute. The tool brings productivity and comfort to document creation through a transcription engine adapted to dictation.
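To put those rates in perspective, here is the arithmetic for a hypothetical 900-word report (the report length is an assumption chosen for illustration):

```latex
t_{\text{typing}} = \frac{900\ \text{words}}{60\ \text{words/min}} = 15\ \text{min},
\qquad
t_{\text{dictation}} = \frac{900\ \text{words}}{180\ \text{words/min}} = 5\ \text{min}
```

At these rates, dictation is three times faster than typing.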

Connected objects (Internet of Things IoT)

The IoT world is also fond of voice innovations, which often concern navigating menus or using the device’s functions. Whether for home automation equipment or more specialized products such as connected mirrors, speech recognition holds great promise.

As more experienced readers will have noticed, this article gives a brief, introductory overview of a complex technology and its uses. Likewise, the architecture we have presented is one particular design of speech technologies, not the norm, although it is the most common one.

To learn more about speech recognition and its capabilities, we recommend you browse our blog for more information or contact us directly to discuss the matter!

How Speech Recognition is Shaping the Future of Technology

How Voice Recognition is Transforming Technology and Enhancing User Experiences

Voice recognition is now at the heart of a technological revolution affecting a wide range of systems and devices, from personal assistants to complex enterprise applications. Thanks to major advances in machine learning models and natural language processing, companies like Vivoka have developed software capable of transforming voice into text quickly and accurately. Voice transcription and dictation make commands more intuitive and personalized, allowing more natural control of devices. Voice recognition technologies are becoming essential for improving accessibility and efficiency and for offering a seamless user experience. With applications ranging from simple voice commands to sophisticated speaker recognition systems, this technology continues to push the boundaries of human-machine interaction.

Our voice recognition technology can be seamlessly integrated into everyday devices, enhancing user interaction across various platforms. The incorporation of voice-driven commands into mobile phones, computers, and smart home devices exemplifies the practicality of speech systems in everyday life. This evolution not only simplifies tasks but also enriches the user experience by enabling more personalized interactions through language models that understand context and user preferences. Furthermore, advancements in audio processing and model training techniques continue to refine the accuracy and responsiveness of these systems, making voice recognition an increasingly indispensable part of modern technology landscapes. This shift towards voice-enabled environments highlights the transformative potential of speech recognition in both personal and professional settings.

Enhancing Accessibility and Inclusivity Through Voice Recognition Technology

Moreover, the expansion of voice recognition capabilities is also opening new avenues for accessibility and inclusivity. By providing easier access to technology for individuals with physical or visual impairments, voice commands are becoming a vital tool in breaking down barriers. The adaptability of voice systems allows them to cater to a diverse range of languages and dialects, further broadening their impact. This inclusivity extends to educational environments and workplaces, where voice technology can offer alternative methods for interaction and engagement. As the technology continues to develop, it promises to enrich lives by making digital services more accessible and user-friendly for all segments of the population, thereby fostering a more inclusive digital world.

In addition to enhancing accessibility, voice recognition technology is also revolutionizing the business landscape by streamlining operations and facilitating smoother communication. Companies are leveraging these systems to automate customer service interactions, reducing response times and increasing satisfaction. Voice-driven analytics tools are enabling businesses to gain insights from customer interactions, helping to refine strategies and improve services. Moreover, the integration of voice commands in the workplace enhances productivity by allowing employees to perform tasks hands-free, thereby increasing efficiency. As industries continue to adopt voice technology, it is poised to transform traditional business models, offering innovative ways to interact with customers and manage operations more effectively.

How Voice Recognition Merges with AI for Enhanced Security and Intuitive Interactions

Furthermore, the continuous refinement of voice recognition technology is leading to more sophisticated applications in security and authentication. Voice biometrics are increasingly used to verify identities, offering a seamless yet secure alternative to traditional passwords and PINs. This technology is being integrated into banking, secure device access and personalized user experiences, relying on voice patterns that are distinctive to each person, much like fingerprints. Combined with the convenience of voice commands, these enhanced security protocols are setting new standards in both personal security and corporate data protection. As we move forward, voice recognition is likely to see even greater adoption across sectors as a way to strengthen security while maintaining user convenience.
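One common way to build voice verification is to compare fixed-length voice embeddings. In this Python sketch the embeddings are given as plain vectors (producing them would require a trained speaker-encoder model, assumed here), the voiceprint is the average of the enrollment embeddings, and a probe is accepted when its cosine similarity with the voiceprint reaches a threshold; the 0.75 value is an arbitrary placeholder that a real system would tune on data.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two embeddings (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def enroll(embeddings: List[Vector]) -> List[float]:
    """Average several enrollment embeddings into a single voiceprint."""
    if not embeddings:
        raise ValueError("need at least one enrollment embedding")
    dim = len(embeddings[0])
    return [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)]


def verify(voiceprint: Vector, probe: Vector, threshold: float = 0.75) -> bool:
    """Accept the speaker if the probe is close enough to the voiceprint."""
    return cosine_similarity(voiceprint, probe) >= threshold


voiceprint = enroll([[0.9, 0.1, 0.2], [1.0, 0.0, 0.1]])
print(verify(voiceprint, [0.95, 0.05, 0.15]))  # True
print(verify(voiceprint, [0.1, 0.9, 0.3]))     # False
```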

The proliferation of voice recognition technology is not just reshaping user interactions and security; it is also driving innovations in artificial intelligence. As these systems become more integrated with AI, they are learning to interpret not just words, but nuances of emotion and intent, enabling more empathetic and contextually aware interactions. This advancement heralds a new era of AI that can adapt to and predict user needs more effectively, creating a proactive rather than reactive technology landscape. As AI continues to evolve alongside voice recognition, the potential for truly intuitive digital assistants that anticipate and understand user preferences will further transform our interaction with technology, making it more natural and aligned with human behavior.

The Transformative Impact of Voice Recognition in Healthcare and Education

This ongoing evolution in voice recognition and AI is also fostering significant changes in healthcare, where it has the potential to revolutionize patient care. Voice-driven applications can facilitate more accurate patient documentation, reduce administrative burdens on healthcare providers, and enhance patient engagement through voice-activated health tracking and management tools. This technology offers a hands-free way to access vital information, making medical environments safer and more efficient. Additionally, speech recognition can support remote patient monitoring systems, providing healthcare professionals with real-time updates on patient conditions. As this technology becomes more sophisticated, its integration into the healthcare system promises to improve outcomes, increase accessibility, and personalize patient care like never before.

Beyond healthcare, voice recognition is also making significant inroads in the educational sector. It transforms traditional learning environments by facilitating interactive and accessible educational experiences. Students can engage with learning materials through voice commands, making educational content more accessible to those with disabilities or learning difficulties. Moreover, language learning is particularly enhanced by speech recognition technologies, as they allow for real-time pronunciation feedback and interactive language practice, simulating natural conversational environments. As educational tools continue to integrate voice technology, we see a shift towards more inclusive and personalized learning paths that cater to a diverse student population, ultimately fostering a more engaging and effective educational experience.

How Voice Recognition Transforms Public Services and Urban Mobility

Additionally, the integration of voice recognition into public services and infrastructure is streamlining processes and enhancing public interaction with government entities. This technology enables citizens to access information and services through simple voice commands, reducing the complexity and time required for traditional bureaucratic processes. From renewing licenses to scheduling appointments and accessing public records, voice-enabled interfaces are making government services more user-friendly and accessible to all, including those with limited mobility or tech-savviness. This adoption not only improves efficiency and reduces administrative overhead but also promotes transparency and accessibility, making public services more accountable and responsive to citizen needs.

The scalability of voice recognition technology also paves the way for its application in larger-scale systems, such as transportation and urban planning. Smart cities around the world are beginning to employ voice-activated systems to improve public transportation, manage traffic flow, and enhance public safety features. Commuters can interact with transit systems through voice commands to find route information, schedule updates, and ticketing options, all hands-free. This integration not only enhances the commuter experience but also contributes to the development of smarter, more responsive urban environments. As cities continue to grow and seek efficient solutions to complex logistical challenges, voice recognition technology stands as a key tool in the development of sustainable and intelligent urban ecosystems.

In the realm of entertainment and media, voice recognition technology is reshaping how consumers interact with content. Voice-activated systems are becoming integral to home entertainment setups, allowing users to search for movies, play music, and control smart home devices with simple spoken commands. This hands-free control is particularly advantageous during activities such as cooking or exercising, where manual interaction with devices is inconvenient. Additionally, the gaming industry is adopting voice commands to provide more immersive experiences, allowing players to interact with the game environment and characters in innovative ways. As voice recognition technology continues to advance, it’s set to further personalize and enhance the entertainment experience, making it more interactive and accessible than ever before.