Did you know that voice privacy is an unavoidable consequence of our digital world? It refers to the right of individuals to control how companies collect and use their personal information through speech-enabled interfaces. As we move toward a voice-first world, human speech is set to become a major medium of human-machine interaction.

Who hasn’t heard about stolen user data, strangely (too) well-targeted ads or unwanted recordings…? In recent years, major companies have already made some blunders with users’ data. We have some examples to share later on!

While users strive for privacy, companies do their best to deal with it, at least to the minimum required. In this context, voice privacy has become an important issue. Read on: we give you a clear map of what’s really behind it.

Why does voice privacy exist?

Specific data is involved (biometric data)

As its name suggests, voice privacy focuses on the data that human speech contains. But there’s a twist: this is not ordinary data. It falls into the category of biometric data, information that is unique to each individual. In the field of AI, we call these characteristics a “voiceprint”: the identity of a voice, like a fingerprint. For the tech-savvy readers, it is also related to spectrograms, which show how the frequency content of an audio signal changes over time.

Much of it comes down to prosody, the many parameters that merge into one’s voice and make it sound the way it does. Intonation, loudness, tempo, rhythm and tension are some key factors of prosody. There are also socio-cultural specificities, such as accents. Depending on the mix, a voice can tell a lot about who you are.
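For the tech-savvy readers, the NumPy sketch below shows what a spectrogram is and how two of the prosodic cues above, loudness and intonation (pitch), can be measured on a signal. It is a minimal illustration under stated assumptions: the signal is a mono float array sampled at 16 kHz, the frame sizes are arbitrary, a pure 150 Hz tone stands in for a voice, and the function names are ours. Real voiceprint systems typically rely on learned speaker embeddings rather than these hand-made measures.

```python
import numpy as np

def frames(signal: np.ndarray, frame_len: int, hop: int) -> np.ndarray:
    """Split a 1-D signal into overlapping frames, one frame per row."""
    n_frames = 1 + (len(signal) - frame_len) // hop
    return np.stack([signal[i * hop : i * hop + frame_len] for i in range(n_frames)])

def spectrogram(signal: np.ndarray, frame_len: int = 512, hop: int = 256) -> np.ndarray:
    """Magnitude spectrogram: rows are time frames, columns are frequency bins."""
    windowed = frames(signal, frame_len, hop) * np.hanning(frame_len)
    return np.abs(np.fft.rfft(windowed, axis=1))

def loudness_contour(signal: np.ndarray, frame_len: int = 400, hop: int = 160) -> np.ndarray:
    """Root-mean-square energy of each frame, a simple proxy for loudness."""
    return np.sqrt(np.mean(frames(signal, frame_len, hop) ** 2, axis=1))

def estimate_pitch(frame: np.ndarray, sr: int, fmin: float = 60.0, fmax: float = 400.0) -> float:
    """Crude pitch estimate (Hz) of one voiced frame: the lag where autocorrelation peaks."""
    frame = frame - frame.mean()
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1 :]  # non-negative lags only
    lag_min, lag_max = int(sr / fmax), int(sr / fmin)
    best_lag = lag_min + int(np.argmax(ac[lag_min : lag_max + 1]))
    return sr / best_lag

sr = 16_000
t = np.arange(sr) / sr
voice_like = 0.5 * np.sin(2 * np.pi * 150 * t)  # synthetic 150 Hz tone standing in for a voice
print(spectrogram(voice_like).shape)             # (time frames, frequency bins)
print(loudness_contour(voice_like)[:3])          # roughly constant for a steady tone
print(estimate_pitch(voice_like[:640], sr))      # close to 150 Hz
```

A real voice would show a pitch contour that rises and falls and a loudness contour that varies from frame to frame; it is the combination of many such cues that makes a voice identifiable.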

Since voice is unique to everyone, it naturally falls into the category of personal data, which is subject to stricter rules. But that’s not all…

Workflows and behaviors are identified (user experience)

It’s not only about what voice contains as biometric data; it is also about what it conveys. Voice privacy also covers the behavioral data and user journeys that voice inputs and commands can reveal. Although they can be seen as ways to improve a service, intent recognition and workflow analysis can easily reveal facts and figures about users.

Companies could therefore use this information “against” people, manipulating the data to get a clear picture of their thought process. In the hands of malicious companies, such sensitive information could be used simply to trigger purchases, or worse.

Voice assistants usually combine several technologies:

- A wake word, which tells the assistant to get ready to listen to what the user is about to say;

- ASR (automatic speech recognition), which transcribes voice commands into text;

- NLU (natural language understanding), which interprets the transcribed text in context, for example by determining the user’s objectives (intent) and identifying their sentiment.
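The three building blocks listed above chain together roughly as in the toy Python sketch below. Everything in it is hypothetical: the wake word, the function names and the keyword rule are placeholders, and the “audio” is plain text so that the example runs without any speech model. Real assistants use trained acoustic and language models at each stage.

```python
from dataclasses import dataclass, field
from typing import Optional

WAKE_WORD = "hey assistant"  # hypothetical wake word

@dataclass
class Intent:
    name: str
    slots: dict = field(default_factory=dict)

def detect_wake_word(utterance: str) -> bool:
    # Stand-in for an always-listening acoustic model: here, a simple text prefix check.
    return utterance.lower().startswith(WAKE_WORD)

def asr(utterance: str) -> str:
    # Stand-in for speech-to-text: the "audio" is already text, so we just normalize it.
    return utterance.lower()[len(WAKE_WORD):].strip(" ,")

def nlu(transcript: str) -> Intent:
    # Stand-in for NLU: one keyword rule maps the transcript to an intent and its slots.
    words = transcript.split()
    if words[:4] == ["set", "a", "timer", "for"] and len(words) > 4 and words[4].isdigit():
        return Intent("set_timer", {"minutes": int(words[4])})
    return Intent("unknown", {"text": transcript})

def handle(utterance: str) -> Optional[Intent]:
    """Run the three stages in order; nothing past the wake word runs without it."""
    if not detect_wake_word(utterance):
        return None
    return nlu(asr(utterance))

print(handle("Hey assistant, set a timer for 5 minutes"))
# Intent(name='set_timer', slots={'minutes': 5})
print(handle("Set a timer for 5 minutes"))
# None
```

Note that the `Intent` returned at the end already captures what the user wanted: on top of the audio itself, this structured result is the kind of behavioral data discussed above.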

Voice privacy was born to protect both a person’s identity and their behavioral data. But what is it protecting them from?

What threats arise from a lack of voice privacy?

Dealing with voice privacy: consumers’ POV

Consumers are the most vulnerable to voice privacy issues because they are often unaware of them and do not know exactly what they can lead to.

We are being recorded! Opaque voice data collection (conversation)

How many devices do we speak to on a daily basis? More than we think. How many of them are listening even when we are not using them? Maybe more than we think, too…

Thanks to the Internet of Things (IoT), many devices are equipped with a microphone and use voice AI, and those that don’t may gain that ability at some point. Today, the most talked-about voice data collectors are the consumer voice assistants from the GAFAMs (Google, Apple, Facebook, Amazon and Microsoft). One could argue that they only have this reputation because they embody the “evil AIs” of big tech, but there have been some real slip-ups along the way.

A few years ago, Samsung issued a privacy warning to its Smart TV users. The tech giant advised customers not to discuss personal information in front of their TV, since what they said could be recorded and shared with Samsung and third parties…

A quick search on the web shows that most big tech brands have their own history of questionable voice data collection practices…

However, while the world’s attention is focused on those “evil” voice assistants, voice data collection grew as AI was built into almost everything, starting with call centers. So it is not only a GAFAM thing: many other companies were collecting voice data at the time.

Its main purpose was, and still is, described as “offering a better user experience and increased satisfaction”. Data is indeed crucial for enhancing voice assistants’ capabilities, but it’s also important to know the customers… right?

User discrimination, targeting and advertising

As we mentioned, voice can tell a lot about someone, from mood and emotion to inferred data such as age, gender, ethnicity, socio-economic status, health conditions and so on.

For instance, during the COVID-19 lockdown, Telefónica used an age-recognition tool to prioritize customers aged 65 and over.

All the information that a user’s voice contains is helping companies to build profiles. What are they using it for? There are some honorable uses like Telefónica’s but… Most of the time, advertising it is!

If you are a big tech player, advertising is one hell of a way to generate income. The more profitable ads are for advertisers, the more profitable they are for the platform, so big tech players really want their ads to perform. The goal is then to provide the best insights, enriched with voice data, to accurately target specific audiences.

On this topic, opinions differ. Some would say that better ads are worth letting companies collect their data. Others protect their data at all costs and reject this practice.

Disclaimer: this is not a conspiracy theory! In the quest to fine-tune advertising, spyware came into play. What is it?! Applications that passively record a person’s conversations to learn more about their desires, plans, needs, etc. So sometimes, ads seem strikingly well targeted to something you talked about moments ago.

→ But don’t panic just yet: other factors, which involve no recording at all, can also explain how such precise targeting is possible!

Scams and identity thefts

When companies collect data, including voice data, it needs to be transferred somewhere to be processed. Welcome to the vast realm of big data. This is where it can become quite dangerous.

With large amounts of data being shared, some of it can be lost… or stolen. Someone who wants to misuse it may not have much trouble finding where it is stored. With advances in AI voices, cloning someone’s voice from a few samples is not that hard anymore, and it is likely to become even easier in the future. While most AI voices are used for entertainment, they can also be used for scams and identity theft, as with audio deepfakes.

Less sophisticated scams use voice as well: voice phishing (or vishing). Some of you may remember the “Can you hear me?” scam, which was used to record people saying “yes”. These recordings could later be used to confirm payments.

Dealing with voice privacy: companies’ POV

Be aware of strategic data leaks!

Every company collects data. Today, businesses need it to analyze and adapt their offer, personalize their services and stay competitive. Not only must they respect data privacy regarding what they collect, what they store and how long they keep it, but they also need to fight against fraud and data theft by others (whether direct competitors or specialized hackers who want to resell the data).

For example, Zoom, the remote collaboration platform that became widely used during the lockdowns, claimed it used end-to-end encryption. In reality, its encryption was weaker than advertised: it protected the data from outside parties trying to access it in transit, but the company itself could access the video and audio content, so it was not truly end-to-end and risks remained. Moreover, Zoom had been running a data-mining feature that let some participants view other users’ LinkedIn profile data without their consent or notification.
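The difference between encryption in transit and true end-to-end encryption comes down to who holds the keys. The sketch below illustrates it with a toy one-time-pad XOR “cipher” from the Python standard library; it is for illustration only (real systems use vetted ciphers such as AES-GCM and proper key exchange), and the function names are hypothetical.

```python
import secrets

def xor_cipher(data: bytes, key: bytes) -> bytes:
    """Toy one-time-pad cipher: XOR each byte with the key. Encrypting and decrypting are the same operation."""
    if len(key) < len(data):
        raise ValueError("a one-time pad must be at least as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))

def transport_encrypted_relay(audio: bytes) -> tuple[bytes, bytes]:
    """Each hop has its own key shared with the server, so the server sees the plaintext."""
    key_sender_server = secrets.token_bytes(len(audio))
    key_server_receiver = secrets.token_bytes(len(audio))
    on_wire_1 = xor_cipher(audio, key_sender_server)
    seen_by_server = xor_cipher(on_wire_1, key_sender_server)  # decrypted at the server
    on_wire_2 = xor_cipher(seen_by_server, key_server_receiver)
    received = xor_cipher(on_wire_2, key_server_receiver)
    return seen_by_server, received

def end_to_end_relay(audio: bytes) -> tuple[bytes, bytes]:
    """Only the two participants share the key; the server forwards bytes it cannot read."""
    key_participants = secrets.token_bytes(len(audio))
    on_wire = xor_cipher(audio, key_participants)
    seen_by_server = on_wire  # still ciphertext
    received = xor_cipher(seen_by_server, key_participants)
    return seen_by_server, received

clip = b"confidential meeting audio"
print(transport_encrypted_relay(clip)[0] == clip)  # True: the server could read it
print(end_to_end_relay(clip)[0] == clip)           # False: the server only sees ciphertext
```

In both cases the recipient gets the audio back intact; what changes is whether the service in the middle can read it, which is exactly what the term “end-to-end” promises.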

These are not the only privacy concerns Zoom has faced. Even if the company now claims it wants to be transparent about what it does to protect people’s privacy, the damage is done.

Beyond the risk of employees accessing users’ data, or of a company selling it to third parties such as social networks, this data could also fall into criminal hands. And unlike recordings of live streams, where people don’t act naturally because they know they may be heard, some voice-enabled devices have an always-on microphone. This means that “accidental” recordings of people speaking naturally, whether at work with colleagues or at home with their family, may occur.

Compliance for customers → A company needs to meet its customers’ needs, otherwise it’s a deal-breaker

Meeting obligations to consumers to prevent data problems

The road to Hell is paved with good intentions. So even if the goal is to create a better customer experience, don’t rush out new features that involve personal data, such as a voice assistant or any AI-based system. You could end up being sued for privacy violations, just like McDonald’s, Google and Amazon. And you don’t want that.

Make sure you align your vision with legal requirements (obviously), but also with what your customers could expect from you.

Companies are facing growing challenges regarding privacy, with new regulations from global leaders such as the European Union’s GDPR. It aims to protect the personal data of people in the EU by implementing strict rules. Personal data includes voice recordings that can identify someone, and voice can even count as biometric data when it is used to identify a person. For the record, the most serious GDPR violations can result in fines of up to 20 million euros ($23 million U.S.) or 4% of a company’s total worldwide annual turnover, whichever is higher.
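The fine ceiling is simple arithmetic. For a company (an “undertaking” in GDPR terms), Article 83(5) GDPR caps fines at the higher of €20 million and 4% of total worldwide annual turnover of the preceding financial year, and Article 83(4) sets a lower tier of €10 million or 2%. A minimal sketch of that calculation, with a function name of our own:

```python
def gdpr_fine_cap_eur(annual_turnover_eur: float, upper_tier: bool = True) -> float:
    """Maximum fine for an undertaking under Article 83 GDPR.

    Upper tier, Art. 83(5): the higher of EUR 20 million and 4% of total worldwide annual turnover.
    Lower tier, Art. 83(4): the higher of EUR 10 million and 2% of that turnover.
    """
    fixed_cap, turnover_share = (20_000_000.0, 0.04) if upper_tier else (10_000_000.0, 0.02)
    return max(fixed_cap, turnover_share * annual_turnover_eur)

print(gdpr_fine_cap_eur(100_000_000))    # 20000000.0: the fixed amount dominates
print(gdpr_fine_cap_eur(1_000_000_000))  # 40000000.0: 4% of turnover dominates
```

For large companies, the turnover-based cap quickly exceeds the fixed amount, which is why the percentage matters most for the GAFAMs.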

Existing methods to tackle voice privacy issues

Obfuscation

Obfuscation is one way to protect voice data. Simple tools can do the job by changing some characteristics of a voice, such as its pitch, while the person speaks. Others, based on speech synthesis, let people change their voice by transcribing their speech and then “re-saying” it with a completely different voice (speech-to-text-to-speech).

For example, when the hacktivist collective Anonymous shares a promotional video where a masked member is speaking, they use an obfuscation tool in order to change their voice and make sure nobody can recognise or identify them.
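The simple voice-changing tools mentioned above can be as basic as resampling. The NumPy sketch below, a deliberately naive illustration with a hypothetical function name, multiplies every frequency in a recording by a constant factor. Unlike real voice changers, it also changes the speaking rate, and a plain shift like this can often be undone, so on its own it offers limited protection against modern speaker recognition.

```python
import numpy as np

def naive_voice_shift(signal: np.ndarray, factor: float) -> np.ndarray:
    """Read the signal at `factor` times the normal speed using linear interpolation.

    Played back at the original sample rate, every frequency is multiplied by `factor`:
    factor > 1 gives a higher, faster voice; factor < 1 a lower, slower one.
    """
    read_positions = np.arange(0, len(signal) - 1, factor)
    return np.interp(read_positions, np.arange(len(signal)), signal)

sr = 16_000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 200 * t)  # 200 Hz stand-in for a voice
shifted = naive_voice_shift(tone, 1.5)
freqs = np.fft.rfftfreq(len(shifted), d=1 / sr)
print(freqs[np.argmax(np.abs(np.fft.rfft(shifted)))])  # close to 300 Hz
print(len(shifted) / sr)                               # about 0.67 s instead of 1 s
```

Dedicated tools decouple pitch from duration (for example with phase-vocoder or PSOLA techniques), and synthesis-based approaches replace the voice altogether.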

Anonymisation

Anonymisation can take two forms:

- Speech content anonymisation, which deletes or replaces sensitive words in what was said before it is saved, so that the personal information is not available;

- Voice anonymisation, which uses dedicated software to change some of the voice parameters in the signal so that it sounds different.
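The first approach, anonymising speech content, can be sketched on an ASR transcript with regular expressions. This is a minimal illustration: the patterns, the `sensitive_terms` list and the placeholder tags are assumptions, and production systems usually rely on named-entity recognition rather than hand-written rules.

```python
import re

# Hypothetical patterns for two kinds of personal information.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\+?\d[\d\s.-]{7,}\d"),
}

def redact_transcript(text: str, sensitive_terms: list[str]) -> str:
    """Replace personal information in a transcript with placeholder tags before it is stored."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    for term in sensitive_terms:
        # Whole-word, case-insensitive match for each listed term (e.g. health conditions).
        text = re.sub(rf"\b{re.escape(term)}\b", "[REDACTED]", text, flags=re.IGNORECASE)
    return text

print(redact_transcript(
    "Call me at +33 6 12 34 56 78 or write to jane.doe@example.com, I have asthma",
    ["asthma"],
))
# Call me at [PHONE] or write to [EMAIL], I have [REDACTED]
```

The second approach, changing the voice itself, works on the audio signal instead, so that the stored recording no longer matches the speaker’s voiceprint.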

R&D collaborations

Just as hackathons exist to uncover security breaches, worldwide research challenges have emerged to identify voice privacy issues and help find ways to prevent or correct them. One of the best-known international challenges is the VoicePrivacy Challenge, whose first edition was held in 2020 as part of INTERSPEECH. It aims to accelerate the development of new anonymisation solutions “which suppress personally identifiable information recordings of speech may contain while preserving linguistic content and speech quality/naturalness.” https://www.voiceprivacychallenge.org/

Legal Initiatives

GDPR (General Data Protection Regulation)

What is it?

The GDPR has applied since May 25, 2018. It introduced very precise rules to protect the personal data of EU residents and let them keep control over it. These rules also cover voice data, which can qualify as biometric data.

The rights it grants include:

- The right of access (to know what data a company holds about you);

- The right to rectification;

- The right to erasure.

In order to “pass the test”, voice assistants must first get users to opt in, since the GDPR requires data controllers to obtain consent (or rely on another legal basis) before processing personal data. Since this law came into force, the GAFAMs have, for example, stopped several activities in Europe:

- Google suspended its transcriptions of recordings in Europe and now asks for opt-in through email;

- Apple apologized for letting contractors listen to Siri recordings, suspended its Siri voice grading program and planned to ask users to opt in before storing voice recordings once the program resumed;

- Amazon had to remove its arbitration clause which allowed them to collect voice recordings from Alexa users and now offers the option to delete voice recordings through the Alexa app.
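In engineering terms, the opt-in requirement and the rights listed earlier (access, erasure) boil down to a few rules in whatever component stores recordings. The Python class below is a hypothetical, in-memory sketch of those rules rather than a compliance recipe: a recording is kept only for users who opted in, users can get a copy of what is held about them, and they can have it deleted.

```python
from dataclasses import dataclass, field

@dataclass
class VoiceRecordingStore:
    """Hypothetical in-memory store that keeps recordings only for users who opted in."""
    consents: set[str] = field(default_factory=set)
    recordings: dict[str, list[bytes]] = field(default_factory=dict)

    def give_consent(self, user_id: str) -> None:
        self.consents.add(user_id)

    def withdraw_consent(self, user_id: str) -> None:
        # Stops future storage; erasing what is already stored is a separate request (erase).
        self.consents.discard(user_id)

    def save(self, user_id: str, audio: bytes) -> bool:
        """Store a recording only if the user opted in; otherwise drop it."""
        if user_id not in self.consents:
            return False
        self.recordings.setdefault(user_id, []).append(audio)
        return True

    def access(self, user_id: str) -> list[bytes]:
        """Right of access: return a copy of everything held about this user."""
        return list(self.recordings.get(user_id, []))

    def erase(self, user_id: str) -> int:
        """Right to erasure: delete the user's recordings and report how many were removed."""
        return len(self.recordings.pop(user_id, []))

store = VoiceRecordingStore()
print(store.save("alice", b"clip-1"))  # False: no opt-in yet, nothing is kept
store.give_consent("alice")
print(store.save("alice", b"clip-2"))  # True
print(store.access("alice"))           # [b'clip-2']
print(store.erase("alice"))            # 1
```

The key design choice is that the consent check happens before anything is written, so recordings from users who never opted in simply never exist in storage.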

Some examples of GDPR applications

It is also worth noting that the GDPR has already had concrete consequences for voice assistants. In 2019, a German privacy watchdog forced Google to stop the human review of audio snippets after snippets from the Google Assistant leaked. A contractor had handed over recordings to a Belgian media outlet, which was able to identify individuals in the clips and learn very personal information about them, such as medical conditions and addresses.

Ireland’s Data Protection Commission also said it had received a breach notification in July 2019, and that Google had suspended processing while it looked into further Google Assistant data breaches.

In other words, if your voice assistant is available to European residents, you must make sure it meets GDPR requirements.

In addition to the GDPR, the European Digital Radio Alliance (EDRA) and the Association of European Radios (AER) asked policymakers to apply the Digital Markets Act (DMA) to voice assistants as well. Officially voted on and approved in July 2022, the DMA mainly targets the GAFAMs and other large companies, as it aims to limit their hold over the internet and the digital space. It should take effect in 2023.

CCPA (California Consumer Privacy Act)

Since January 1, 2020, California consumers have had the following new rights regarding their personal data collected and managed by companies:

- The right to know what personal information is collected, used, shared or sold, both as to the categories and specific pieces of personal information

- The right to delete personal information held by businesses and by extension, a business’s service provider

- The right to opt-out of the sale of personal information. Consumers are able to direct a business that sells personal information to stop selling that information. Children under the age of 16 must provide opt-in consent, with a parent or guardian consenting for children under 13

- The right to non-discrimination in terms of price or service when a consumer exercises a privacy right under CCPA.
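The age thresholds in the opt-out right above are easy to misread at the boundaries, so here they are as a small Python function. It is a sketch assuming the business knows the consumer’s age; the function name and return strings are ours.

```python
def ccpa_sale_permission(age: int) -> str:
    """Who must agree before a business sells a consumer's personal information under the CCPA rules above."""
    if age < 13:
        return "opt-in by a parent or guardian"
    if age < 16:
        return "opt-in by the consumer"
    return "allowed unless the consumer opts out"

for age in (12, 13, 15, 16):
    print(age, ccpa_sale_permission(age))
```

In other words, a 13-year-old can give opt-in consent personally, while selling a 16-year-old’s data falls back to the default opt-out regime.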

In practice, these CCPA provisions cover all the major components of your personal data, such as:

- Your name

- Your username

- Your password

- Your phone number

- Your physical address

- Your IP addresses

- Your device identifiers

APPI (Act on the Protection of Personal Information)

The amended Act on the Protection of Personal Information took effect on April 1, 2022. This Japanese law aims to protect citizens’ privacy by regulating businesses that acquire personal information in order to supply goods and services to individuals in Japan. Just as the GDPR doesn’t only apply to businesses in the European Union, the APPI doesn’t only concern Japanese businesses: it applies to all businesses handling the data of individuals in Japan. Like the GDPR, the APPI covers biometric information, through the notion of “individual identification codes”: characters, letters, numbers, symbols or other codes that allow a person to be identified. As a result, it also treats voice as personal data that must be protected from misuse by large companies seeking to profit from it.

Some actors manage to reach agreements to ease the process, such as the EU and Japan, which published a joint statement recognizing each other as having adequate levels of personal data protection. Since their regulations on the subject are similar, they effectively “whitelisted” each other.

Final words on privacy

As you can see, privacy has become an important subject in recent years. Every actor is taking action to protect it at its own level, since there is no worldwide privacy regulation. As a result, it may soon become hard for businesses to collect personal data while remaining compliant with every regulation. Not to mention that the reins of the internet are held by Google, one of the GAFAMs, which more or less tries to comply with EU rules (needless to say, it has sometimes failed).

Take the little pop-ups that appear when you browse a website: according to Google, intrusive pop-ups are likely to be penalized. So what do we do if the only proven way to get opt-in for cookies is itself intrusive? Moreover, given the difficulties authorities face when taking action against such giants, it is clear that we cannot control everything, everywhere, all at once.

So wouldn’t it be easier not to have humans processing or accessing any data in the first place? There is a world where all data processing happens on the device. It would mean no data processing when there is no need for it, no exploitation of personal information without consent and less hassle for small and medium-sized enterprises. In other words, it would mean embedded voice technology, integrated directly into the product or interface.

Vivoka’s advice: keep data on the device so it doesn’t fly off to the cloud

What is embedded voice AI?

Embedded means that the system runs on the device itself. Since data is never processed beyond the device, the system needs smaller models that fit within the device’s resources. This is also why embedded AI is related to edge computing, with an additional layer of security: it can deliver end-to-end voice experiences that respond faster and don’t require collecting data.

What are the benefits of using it?

Embedded technology has many benefits, especially now that more and more laws aim to protect privacy.

- Everything is processed on the device, as mentioned before, and no data is transferred unless the user wants it to be. Users remain the sole owners of their data, and the supplier doesn’t need to worry about how to handle it, since it never has access to it in the first place. This matters because data management is a real struggle for companies, and mishandling it can lead to severe penalties from authorities.

- It works without network latency: since nothing is processed anywhere other than on the device, it doesn’t rely on a network and runs in real time.

- As it works offline, users can rely on it anytime, anywhere, whether or not a Wi-Fi or mobile network is available.

- It also lowers the technology’s footprint, as it can run on battery-powered devices for years.

- It is a cost-effective way of implementing voice AI, as it doesn’t rely on the pay-as-you-go models that cloud solutions often offer.

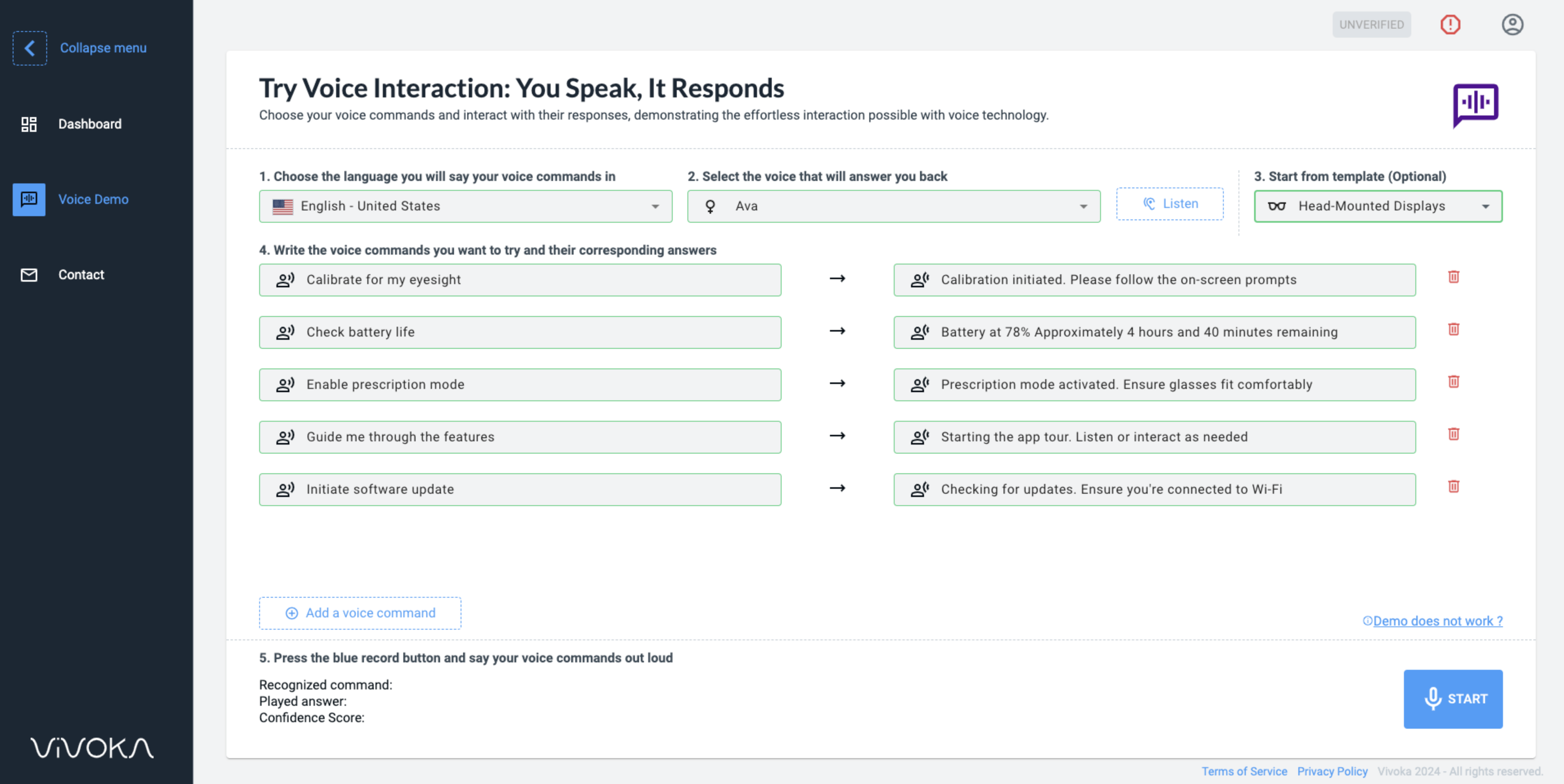

Specialized in offline voice AI, Vivoka provides the VDK:

A complete development solution to help you implement voice technology in your products or services and use it anywhere, anytime. It lets you develop fully on-device solutions, so your customers’ data is never processed in the cloud. Moreover, unlike most cloud solutions, we are flexible and adapt to your business model. Whether you want to test it or just discuss your project, feel free to contact us!

On-premise/hybrid computing

Cloud computing can still be leveraged while speech processing stays on the device; we have another article on this topic to help you make a choice.

Voice privacy in a nutshell: Conclusion

In an increasingly voice-first world, there is currently not much individuals can do to preserve their voice privacy, apart from no longer using voice assistants and avoiding calls with companies that use voice analysis, which limits how much of their voice is recorded and, therefore, how much voice data is processed… Even though solutions are being developed, these techniques are still far from being usable by individuals in everyday life. Most often, when they are used, it is because a company has implemented tools to protect its customers’ privacy. It is therefore up to companies to take the initiative; otherwise, things won’t move forward.

That’s also why larger bodies, such as the European Union and national governments, are beginning to introduce legal obligations, to encourage companies to update their privacy policies in line with customers’ and citizens’ latest expectations. For now, the European Data Protection Board (EDPB) is one of the strictest organizations. Yet, despite its relative effectiveness in discouraging the misuse of personal data, it still faces some difficulties; in particular, it struggles to act quickly on complaints against the GAFAMs and other Big Tech companies.

Data privacy concerns us all, whether you head a leading tech company or work for an SME. It is a great thing that authorities help raise awareness and make companies respect it, regardless of the data involved.