What is Natural Language Processing?

Natural Language Processing (NLP) sits at the intersection of artificial intelligence and linguistics. It is the technology that allows computers to imitate human communication behaviour (speaking, listening, reading, understanding, etc.). Left to themselves, computers misinterpret human sentences; Natural Language Processing attaches a sense (or meaning) to words and sentences. This lets computers communicate with users in a more relevant way and unlocks applications such as conversational AIs.

Natural Language Processing Levels

The computer must be able to understand statements and interpret them as a human would. Years of research in the domain have produced a general processing scheme. It consists of several steps that must be followed in the right order to interpret human language accurately. Just as humans need to wait until the end of a sentence to understand it in context, the computer needs to determine where a sentence begins and ends before analysing the words inside it, and only then process the overall meaning of the sentence according to the context. This allows the machine to break down textual or speech elements in order to extract their meaning. Traditionally, Natural Language Processing is divided into several processing levels; we are going to dive into the main ones.

Lexical Processing

Lexical processing consists of analysing the structure of a sentence based on surface rules (what we see), such as a capital letter, which signals the beginning of a sentence or a proper noun, or the dot at the end of a sentence. The process that lets the machine separate the words or groups of words in a sentence is called “tokenization”. The resulting tokens can also simply be counted without regard to their order, which is the “bag-of-words” representation.

Each token is then labelled with a “tag” that assigns it to one of the grammatical categories, for example:

- Pronoun in the singular;

- Verb conjugated to the present;

- Singular pronoun;

- Dot;

- … and so on for each element of the sentence.

At the end of this step, we have everything needed to characterise each word according to its nature and its role in the sentence as a whole.

Let’s take an example. If the input is: “The woman wears Nike sneakers.”

Then we should have as output:

- “The” = definite article starting the sentence (recognized thanks to the capital letter);

- “woman” = common noun;

- “wears” = verb conjugated to the present;

- “Nike” = proper noun (recognized thanks to the capital letter);

- “sneakers” = common noun, ending the sentence with the dot.
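The lexical step above can be sketched in a few lines of Python. This is a minimal illustration, not how a production tagger works: the `LEXICON` dictionary, the `tokenize` and `tag` function names and the label strings are all assumptions made for this example, and the only surface rules applied are the ones mentioned in the text (capital letters and the final dot).

```python
import re

# Hypothetical mini-lexicon covering only the example sentence;
# a real tagger would rely on a trained model or a full dictionary.
LEXICON = {
    "the": "definite article",
    "woman": "common noun",
    "wears": "verb (present tense)",
    "sneakers": "common noun",
}

def tokenize(sentence):
    """Split a sentence into word tokens and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", sentence)

def tag(tokens):
    """Label each token with the lexicon first, then with surface rules."""
    tagged = []
    for i, token in enumerate(tokens):
        if not token[0].isalnum():
            label = "punctuation"
        elif token.lower() in LEXICON:
            label = LEXICON[token.lower()]
        elif i > 0 and token[0].isupper():
            # Capitalised word that does not start the sentence: likely a proper noun.
            label = "proper noun"
        else:
            label = "unknown"
        tagged.append((token, label))
    return tagged

tokens = tokenize("The woman wears Nike sneakers.")
tagged = tag(tokens)
for token, label in tagged:
    print(f"{token}: {label}")
```

Note that “Nike” is not in the lexicon: it is labelled a proper noun purely because of its capital letter in the middle of the sentence, which is the kind of surface rule described above.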

Syntactic Level

This level deals with grammar. To understand the meaning of a sentence, it is first necessary to determine which groups of words make it up and whether they are assembled in a grammatically correct way.

With this decomposition, each group of words in the sentence is isolated and identified. The syntax can be validated by defining in advance the different structures a sentence can adopt. These structures are difficult to define because of the many possible ambiguities. For example: “I want to eat and sleep the whole day” → eat the whole day, sleep the whole day, or both?

For the earlier sentence, “The woman wears Nike sneakers.”, the output should validate the groups of words and confirm that the sentence is grammatically correct:

- The fact that “the woman wears sneakers” and not the opposite is correct;

- Nike is the brand of the sneakers that she is wearing.
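Validating syntax against structures defined in advance can be sketched as follows. This is a deliberately tiny illustration under stated assumptions: the tag names (`DET`, `NOUN`, `VERB`, `PROPN`), the `ALLOWED_STRUCTURES` set and the `is_valid_structure` function are hypothetical, and real parsers use full grammars or trained models rather than a fixed list of patterns.

```python
# Hypothetical, very small set of accepted sentence structures,
# written as sequences of coarse part-of-speech tags.
ALLOWED_STRUCTURES = {
    ("DET", "NOUN", "VERB", "NOUN"),
    ("DET", "NOUN", "VERB", "PROPN", "NOUN"),
    ("DET", "NOUN", "VERB", "DET", "NOUN"),
}

def is_valid_structure(tagged_tokens):
    """Return True if the tag sequence matches one of the predefined structures."""
    tags = tuple(tag for _, tag in tagged_tokens)
    return tags in ALLOWED_STRUCTURES

correct = [("The", "DET"), ("woman", "NOUN"), ("wears", "VERB"),
           ("Nike", "PROPN"), ("sneakers", "NOUN")]
scrambled = [("wears", "VERB"), ("The", "DET"), ("sneakers", "NOUN"),
             ("woman", "NOUN"), ("Nike", "PROPN")]

print(is_valid_structure(correct))
print(is_valid_structure(scrambled))
```

A pattern list like this only checks word order. It accepts “The sneakers wear Nike woman” just as readily, which is one reason the semantic level is still needed afterwards.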

Semantic Level

This level completes the lexical and syntactic analyses, which provide it with an interpretable sentence. It deals with semantics: this is where the computer gives meaning and context to the sentence in order to understand it as a whole. The output is a model of the meaning. This part is handled by Natural Language Understanding; we suggest you read our article on the subject for a more precise view of it.

Each level of Natural Language Processing depends on the previous one. This means that a system cannot correctly understand the semantics of a sentence without first having completed the lexical processing and validated the syntactic level.

Natural Language Generation

Natural Language Generation (NLG) produces natural written or spoken output. It is a subset of NLP in the sense that it is able to speak (or write, you get it), but not to read or understand. It therefore relies on the previous steps of Natural Language Processing, which analyse the sentences and understand the meaning, intents and entities: Natural Language Understanding. In the VDK’s stack of technologies, Natural Language Generation is handled by voice synthesis, or text-to-speech.

Examples of current applications for Natural Language Processing

Spam detection

A common example that you may not suspect is the email filter, which saves your inbox from thousands of undesired or dangerous messages. A classic approach is the Bayesian filter, so called because it uses Bayesian logic to evaluate the header and content of an email and estimate the probability that it is spam. It scans the content and compares it to content that often appears in spam or junk mail. It is an everyday example, yet not everybody is aware of it.

This kind of classification is not only used for spam messages! For example, Gmail has a classification system that sorts emails according to the subject of their content (social, promotions and so on).
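The Bayesian logic mentioned above can be sketched with a naive Bayes classifier. This is a minimal sketch, not the filter any real mail provider runs: the four training messages, the `train` and `spam_probability` function names and the use of add-one smoothing are assumptions chosen to keep the example short, and only the message body is considered, not the header.

```python
import math
from collections import Counter

# Hypothetical toy training data; a real filter learns from a very large mail corpus.
TRAINING = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the project report", "ham"),
]

def train(examples):
    """Count word occurrences per class and the number of messages per class."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, class_counts

def spam_probability(text, word_counts, class_counts):
    """Return P(spam | text) under the naive Bayes assumption, with add-one smoothing."""
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    total_messages = sum(class_counts.values())
    log_scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        # Start from the prior probability of the class.
        score = math.log(class_counts[label] / total_messages)
        for word in text.lower().split():
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        log_scores[label] = score
    # Turn the two log scores into a normalised probability.
    highest = max(log_scores.values())
    weights = {label: math.exp(s - highest) for label, s in log_scores.items()}
    return weights["spam"] / sum(weights.values())

word_counts, class_counts = train(TRAINING)
p_offer = spam_probability("free prize now", word_counts, class_counts)
p_work = spam_probability("project meeting monday", word_counts, class_counts)
print(f"free prize now: {p_offer:.2f}")
print(f"project meeting monday: {p_work:.2f}")
```

Words seen mostly in spam push the probability up and words seen mostly in legitimate mail push it down. The same scheme extends to more than two classes, which is how a sorter for categories such as social or promotions could be approached.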

Sentiment Analysis

Sentiment analysis is a Natural Language Processing based workflow used to determine whether a piece of feedback is positive or negative. This technology can be applied to email analysis or social listening. It helps leverage textual data such as customer insights or trends to find out which aspects are worth putting forward and which features deserve attention or improvement.
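One simple way to approach this is a lexicon-based scorer, sketched below. It is only an illustration: the word lists, the `sentiment` function name and the one-word negation rule are assumptions for this example, and production systems usually rely on much larger lexicons or trained models.

```python
import re

# Hypothetical hand-made lexicon, far smaller than anything used in practice.
POSITIVE = {"good", "great", "love", "excellent", "fast"}
NEGATIVE = {"bad", "slow", "hate", "terrible", "broken"}
NEGATIONS = {"not", "never", "no"}

def sentiment(text):
    """Classify text as 'positive', 'negative' or 'neutral' by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        polarity = int(word in POSITIVE) - int(word in NEGATIVE)
        # Flip the polarity when the previous word is a negation ("not good").
        if i > 0 and words[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

for review in (
    "I love this product, the delivery was fast",
    "The app is slow and the login is broken",
    "The support was not good",
):
    print(f"{review} -> {sentiment(review)}")
```

Counting words in this way is easy to explain but brittle: it has no notion of context beyond the single negation rule, so it misreads anything that depends on tone.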

Search Engine Results

Google’s “RankBrain” is an algorithm whose mission is to understand users’ search requests and analyse how users react to the results. Natural Language Processing is part of it and allows Google to figure out what people mean. For example, when somebody searches for “the black console developed by Microsoft”, the first result is the latest Xbox.

Some years ago, Google’s algorithm mainly crawled websites to find the keyword(s) the user was searching for and displayed the matching pages on the results page.

Nowadays, Google’s algorithm uses Natural Language Processing as one of its components to show pages that may correspond to the search request. It then checks whether they did by observing the click-through rate, the dwell time and the bounce rate (and plenty of other indicators).

It is just like looking for a word or an expression in a dictionary. You can either search for its letters one after another to compose the word you are looking for, or search by meaning. Who has never looked for a forgotten word by typing its meaning?

Language Translation

Language translation is a familiar example for most people. In fact, it is probably one of the first applications of Natural Language Processing in history, as Georgetown University and IBM presented their first NLP translation machine in 1954. At the time, it was able to translate about sixty sentences from Russian to English with a combination of linguistics, statistics and language rules. Natural Language Processing is important here in order to interpret a message precisely and to find an equivalent in the foreign language that preserves its particularities.

However, even if it is one of the most obvious examples in people’s minds, it isn’t the easiest application for the technology. The system needs to understand the context well in order to give a correct translation. Nowadays, translation machines no longer translate word for word. This makes the translation far more accurate but requires a lot of processing.

Chatbots & Smart Assistants

NLP-based chatbots give more accurate responses because they interpret the user’s intention and rely on artificial intelligence to determine the most appropriate answer. The same goes for smart assistants such as Siri, Alexa or Google Assistant. They have become true assistants capable of creating and editing shopping lists, buying something for you and so on. We advise you to read our blog post about voice user experience for an overview of the best practices in the design of a smart assistant!

Limitations

Natural Language Processing is a powerful solution but it is not without limits. As you can imagine, there are several things making it difficult for a machine to perfectly understand and speak natural language.

Ambiguity

The ambiguity of human language can be hard to resolve, sometimes even for human beings. NLP systems can have difficulty distinguishing the multiple meanings of the same word or phrase, as with homonyms.
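One classic way to pick between the meanings of a homonym is to compare the surrounding words with a definition of each sense, a simplified form of the Lesk algorithm. The sketch below is an illustration only: the `SENSES` inventory, its two definitions of “bank”, the stopword list and the `disambiguate` function are hypothetical, and a real system would use a lexical database or a trained model.

```python
# Hypothetical sense inventory for the homonym "bank".
SENSES = {
    "bank": {
        "financial institution": "an institution that holds money deposits and grants loans",
        "river side": "the sloping land along the edge of a river or lake",
    }
}
STOPWORDS = {"a", "an", "the", "of", "or", "and", "that", "to", "at", "on", "in", "i", "we"}

def content_words(text):
    """Lower-case the text, strip basic punctuation and drop stopwords."""
    return {word.strip(".,!?").lower() for word in text.split()} - STOPWORDS

def disambiguate(word, sentence):
    """Pick the sense whose definition shares the most words with the sentence."""
    context = content_words(sentence)
    overlaps = {
        sense: len(context & content_words(definition))
        for sense, definition in SENSES[word].items()
    }
    return max(overlaps, key=overlaps.get)

print(disambiguate("bank", "I went to the bank to deposit money"))
print(disambiguate("bank", "We sat on the bank of the river"))
```

The approach works here because each sentence happens to share a word with the right definition. When there is no overlap at all, the function simply falls back to the first sense listed, which illustrates why ambiguity remains a real limitation.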

Moreover, these systems still aren’t able to grasp all the subtleties of language, for example when we use sarcasm or irony to express the opposite of what we are saying. It is therefore harder for a computer to understand that a “positive” word can express a negative sentiment in some contexts.

Domain-specific jargon

Depending on our culture or our field of work, we all use different expressions and specific jargon to communicate. In order to really understand natural language, machines need to understand commonly used words as well as more specific ones. This is possible thanks to the regular training and updating of custom models. For domain-specific jargon, it is also possible to rely on models built specially for the niche.

Low-resource languages

Low-resource languages are languages for which little data is available to train AI systems. Many widely spoken European and Western languages are high-resource, but several languages have very little data, such as many African languages or some Eastern European languages like Belarusian. This makes it more difficult to train models to understand these languages properly, even with the help of newer techniques such as multilingual transformers and multilingual sentence embeddings, which are meant to identify universal similarities across existing languages.

Final thoughts

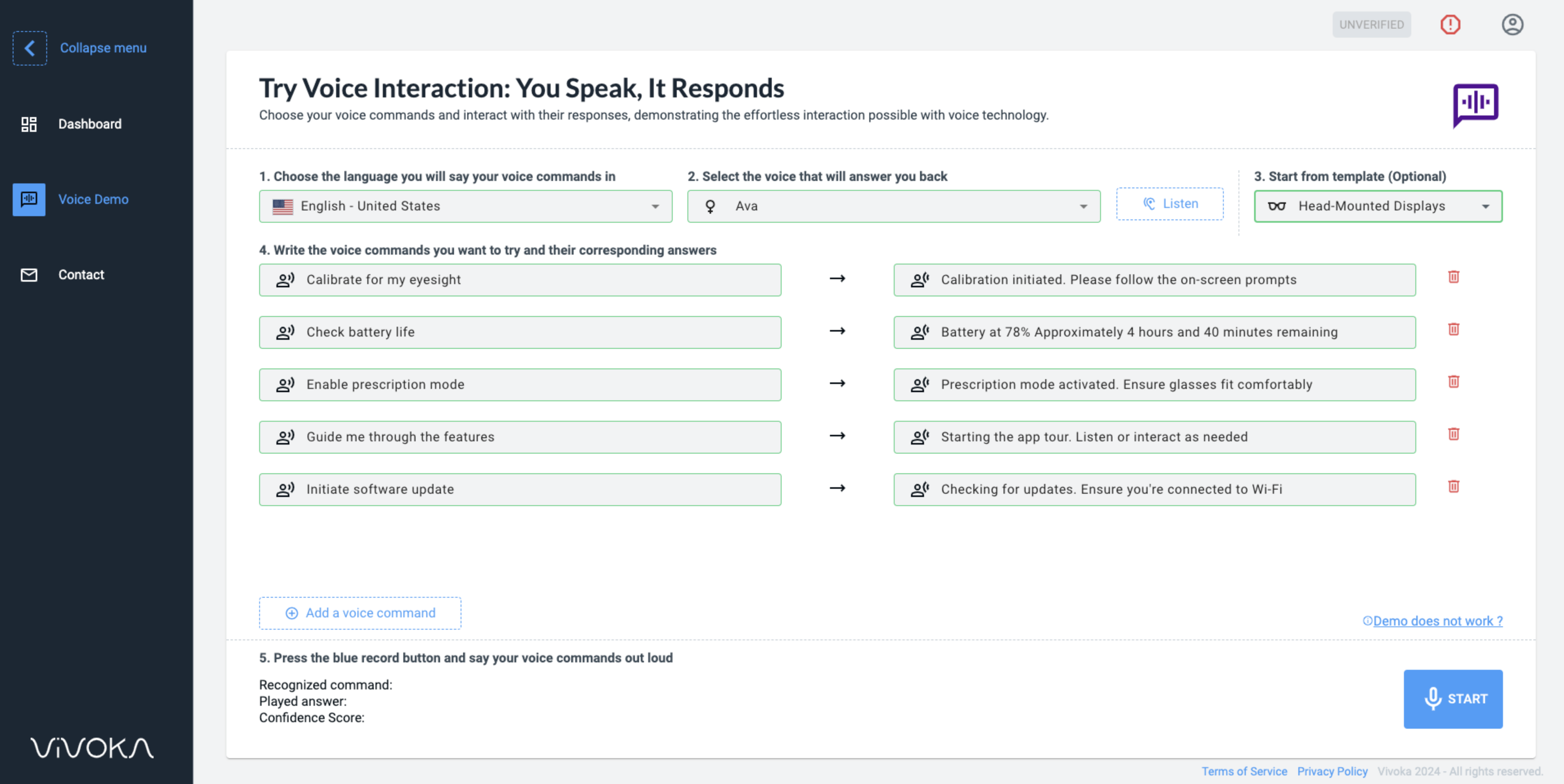

Natural Language Processing has existed for a long time now and has enabled huge advances in computer science. Current conversational AIs such as ChatGPT, Siri, Google Assistant and many more can answer your questions and keep getting better thanks to the amount of data they process. But NLP isn’t only designed for the Cloud. Vivoka provides companies with offline voice technology that makes it possible to build a completely embedded NLP system and keep data private, whether it belongs to your end customers or to your workforce. Interested in creating a custom voice assistant to streamline your processes and improve your productivity? Contact us!

More about Natural Language Processing

Enhancing Natural Language Processing with Advanced Neural Models and Machine Learning

As we continue to evolve our understanding and capabilities in natural language processing, the integration of large-scale neural models and advanced machine learning techniques is enhancing our ability to perform complex tasks such as speech recognition, text analysis, and entity identification. These tools not only expand the breadth of applications accessible to businesses and customers but also deepen our comprehension of language and its nuances. For example, by leveraging expansive data sets and sophisticated models, NLP can parse the intricacies of human speech, from recognizing the meaning behind a sentence to understanding the context within which a word is used. This continual progression in NLP not only refines our interaction with technology but also bridges the gap between human cognitive processes and machine understanding, paving the way for more intuitive and responsive systems across various domains.

Future Developments in Natural Language Processing

As the frontier of natural language processing expands, the future holds promising developments that will further merge human-like understanding with machine efficiency. The next generation of NLP applications will likely incorporate more refined models capable of learning from less structured text and speech, enhancing their capacity for natural interaction without rigid programming constraints. This evolution will enable machines to better grasp the subtleties of language, such as idiomatic expressions and cultural nuances, making them more adept at serving a diverse global audience.

Furthermore, these advancements will provide deeper insights into customer sentiments and behaviors, offering businesses valuable data to tailor their services and products more effectively. The integration of these sophisticated tools into everyday technology is transforming how we interact with the digital world, making it more engaging and aligned with human communication styles.

Enhancing Human-Machine Interactions

As we look towards the future of natural language processing, it’s clear that the integration of advanced neural networks and machine learning will further enhance our ability to interpret and generate human-like text and speech. This ongoing development will not only refine how machines understand and respond to us but will also revolutionize various fields such as healthcare, education, and customer service by providing more accurate and context-aware interactions.

With each advancement, NLP is becoming an indispensable tool in extracting meaningful information from vast amounts of data, enabling more personalized and effective communication. These innovations promise to make our interactions with technology even more seamless and intuitive, ushering in a new era of digital sophistication where machines can understand not just the words we use, but the intent and emotion behind them.