In our previous blog post, we discussed the reasons that kept smart glasses (and wearables overall) from meeting their expected adoption rate. As voice technology experts, our ability to solve this problem globally is of course limited; otherwise we would be smart glasses nerds…

However, we still strongly believe that voice is one of the most promising ways, if not the most promising, to carry this smart eyewear revolution. To fill our gap in smart glasses knowledge, we have invited AMA’s VP Product & Partnership, Guillaume Campion, a pioneer of assisted reality and workflow management software solutions, to join our thought process on the benefits of voice.

FYI: AMA has been working with us for a couple of years now. They are used to working with different smart glasses manufacturers (Vuzix, RealWear, Google Glass, LIvision…), adding custom software layers to their devices and introducing embedded voice technologies through Vivoka’s Voice Development Kit.

Technology changes its shape through wearables… so does its user guide!

“Smart glasses are basically computers that we put on our heads. But they are not designed to be used with our hands since they are placed near 4 of the 5 other human senses: hearing, vision and voice.”

Indeed, what Guillaume is smartly saying is that we can’t keep thinking about our technologies with the same methods we have relied on so far. Wearing computers of course requires new usage concepts. In sci-fi, people always operate technology that does not resemble a computer through gestures, sight, thought and voice.

The only counterexample we can think of comes from the Dragon Ball Z series and its “power scanner”, which strangely requires pushing a button. Maybe it was too soon for people to even consider that voice was an option. Don’t forget that DBZ first came out in 1989!

What are the main benefits of using voice with smart glasses?

Real hands-free navigation, what smart glasses are basically designed to do

Nowadays, the most common ways of navigating our devices are touchscreens and buttons. Even the youngest users have more than internalized this. But in some cases, including the ones related to smart glasses, having both hands fully available is close to mandatory.

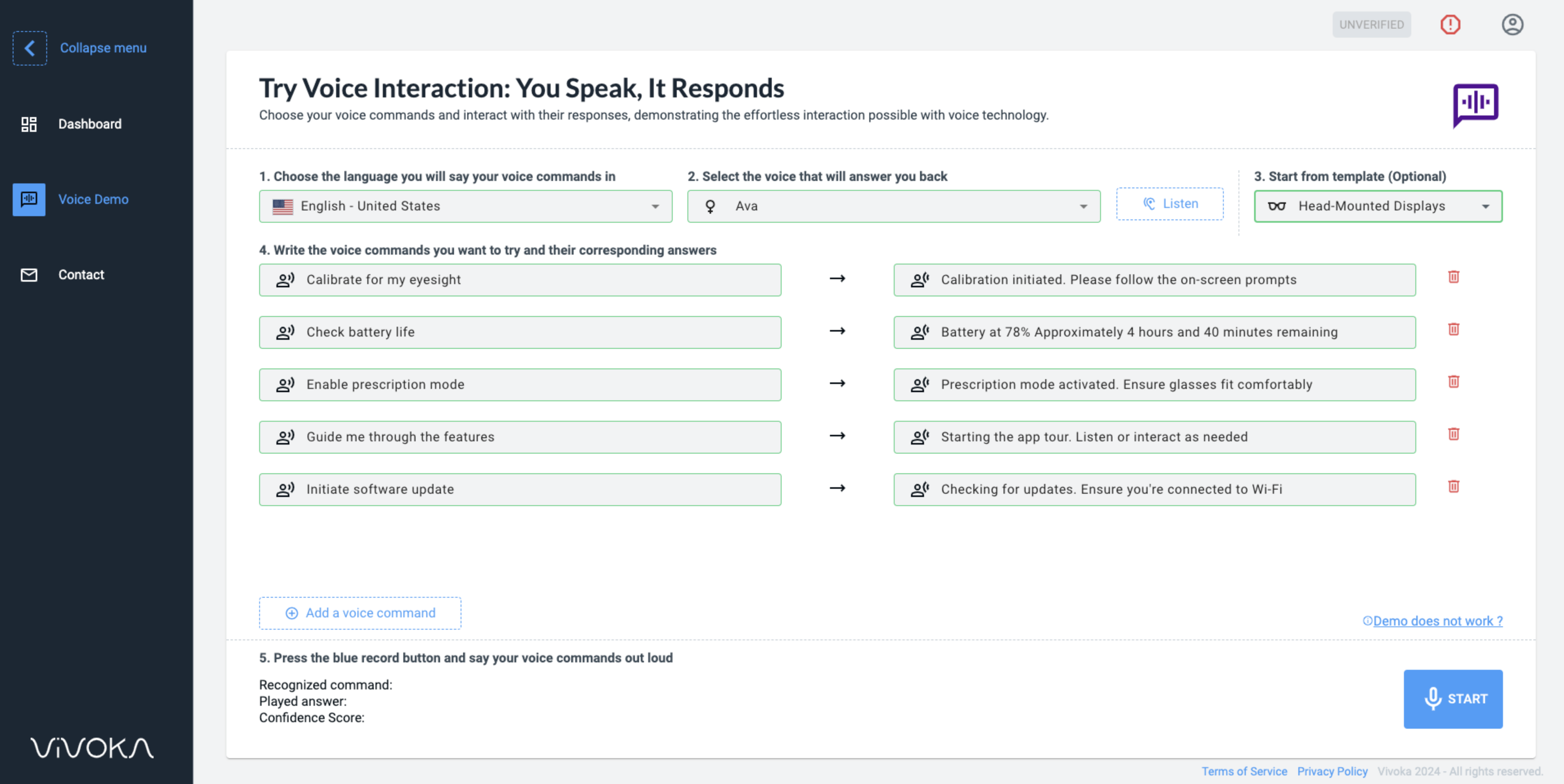

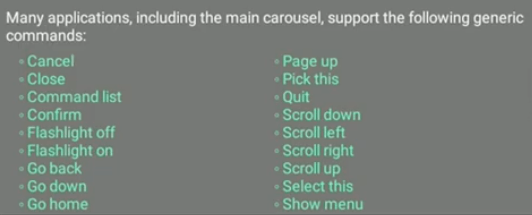

Voice recognition enables fully hands-free navigation and makes it faster for users to get around the interface. If you have already tried smart glasses of any kind, you know what we are talking about. Smart eyewear is small and head-mounted, so it leaves designers little room for buttons or large touchpads that can be used easily and accurately. We created a short video to compare the same workflow, with voice and with touch.

Also, let’s say you want to take notes or send a message or an email. How can you possibly write medium to long texts without relying on external devices such as smartphones? It is genuinely difficult. Voice makes it possible to enter text through dictation or transcription on such an “incompatible” device. On top of that, humans dictate faster than they write: about 150 words per minute vs. only 60 when writing or typing…
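To put those two figures side by side, here is a minimal sketch of the arithmetic. It assumes both rates are words per minute, and the 300-word note length is a made-up example rather than a measured value.

```python
# Rates taken from the figures quoted above, assumed to be words per minute.
DICTATION_WPM = 150
TYPING_WPM = 60


def seconds_to_enter(word_count: int, words_per_minute: int) -> float:
    """Time in seconds needed to enter `word_count` words at a given rate."""
    return word_count / words_per_minute * 60


note_length = 300  # hypothetical note length, in words
dictated = seconds_to_enter(note_length, DICTATION_WPM)
typed = seconds_to_enter(note_length, TYPING_WPM)
print(f"dictated: {dictated:.0f} s, typed: {typed:.0f} s, speed-up: {typed / dictated:.1f}x")
```

Under these assumptions the ratio stays the same whatever the note length, since it only depends on the two rates.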

Keeping hands totally free while using the device is a necessity in professional environments, and even more so in certain fields. Telemedicine, factory work, remote operations… All of these use cases involve professionals doing manual work that requires accuracy and expertise. Imagine being a surgeon, having to switch between different applications:

- checking vital signs;

- activating the flash light;

- recording;

- and at the same time holding scalpels and doing surgery on a patient.

This doesn’t seem really compatible with the level of safety that is required.

Voice-based wearables are improving safety and efficiency

By replacing the device’s navigation principles with a voice-based, hands-free alternative, smart glasses and wearables overall are becoming safer. When talking with Guillaume, we agreed that technology can be distracting because it demands a certain amount of focus to operate properly. AMA and Vivoka are used to working with many kinds of industries, and these sectors operate under a ton of safety guidelines, standards and regulations. In that context, we found that smart glasses really do increase safety in the workplace simply because they don’t get in your way while you work. They truly assist the user, through the features they provide but also through the way people use them: voice and vision.

If we imagine what the (not so distant, to be honest) future could be, text-to-speech technologies that tell users what to do in the form of work instructions, or image recognition that reads out the safety requirements for the equipment the user is looking at, would be game-changing features.

These hearing-based features could fully complement what vision and voice currently provide, forming a kind of trio of cognitive interactions.

Voice is easing the way users are adopting and getting familiar with smart glasses and wearables

“The thing is that with voice and the way voice commands are displayed on the screen, any user that can read and speak (according to the device’s language support at least) is able to quickly get familiar with the interface and get things done.”

All these benefits in terms of ergonomics, efficiency, speed and safety join forces to help with something that can define a device’s future: its adoption by users. This is important for everyone, but it is becoming even more crucial for companies that want to make smart glasses a professional tool for their staff. Resistance to change is real in any company and organization. Even if users don’t outright refuse something they can’t easily understand or use, they will at least need time and a lot of effort before things run smoothly.

Remember when people had to switch from their good ol’ sheet of paper to typing on touchpads… Smart glasses can seem more disruptive, but basically they are facing the same situation as their predecessors. And in fact, some of the work of democratizing tech has already been done!

Voice is great for user adoption. Because commands are designed to be as natural and intuitive as possible (thanks to Natural Language Understanding, for instance), few barriers prevent users from getting used to it quickly and easily. Building on HMI (Human-Machine Interface) principles, Voice User Experience (VUX) provides guidelines to make commands and actions as “human” as possible, so users can really interact with technology the way they would with another person.

Some of the voice commands that can be used on Vuzix’s Smart Glasses (M400 Series)

Voice in wearables should not be considered the only solution; it should always complement others

Even though we came to the conclusion that using voice to interact with head-mounted technologies (and all sorts of wearables) is one of the most legitimate solutions, it is still more complex than it seems. And we are not only talking about technology. Whether voice is the right fit mainly depends on who is using the device, where, and what for.

Who is using the device?

Indeed, we are not all equal. People can have speech impairments that prevent them from using any voice-based features, either because they can’t speak or because the way they speak is not handled by speech engines. Also, voice recognition engines still don’t cover all the languages and dialects in the world… Some people will still not have their mother tongue recognized…

Where is it being used?

“You don’t want everyone to know what you are doing or looking for. Privacy is a big concern in most situations… This is also the reason why embedded speech recognition is that much asked for. Everything stays in the device, no data transferred, no unwanted recordings…”

Noise levels can also have an impact on voice technologies. There are many solutions (specific microphones, noise-robust models…) to address these problems, but sometimes too much noise is… too much noise. To reach that point, though, you have to go up to 100 or so decibels. During CES we even ran demonstrations, such as Vuzix’s smart glasses, that worked perfectly regardless of the crowd!

What is it used for?

In the same way that some people are not able to speak, some are not able to hear well, or at all, which means text-to-speech can hardly be the only output channel on this kind of device. Voice biometrics should be paired with other authentication methods, for safety and for convenience as well. A device cannot rely entirely on voice commands in every case. Device interactions should be multimodal to fit every persona they encounter.
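As a rough illustration of what “multimodal” could mean in practice, the sketch below picks an input and an output channel from the three questions above (who, where, what for). Everything in it is hypothetical: the `Context` fields, the `touchpad` and `display` fallbacks, and the use of the “100 or so decibels” figure as a hard cut-off are assumptions made for the example, not a description of any real device.

```python
from dataclasses import dataclass

# Rough figure mentioned earlier ("100 or so decibels"), used here as a
# hard threshold purely for illustration.
NOISE_LIMIT_DB = 100


@dataclass
class Context:
    can_speak: bool           # who: the user is able to use voice input
    can_hear: bool            # who: the user is able to use audio output
    language_supported: bool  # who: the speech engine covers the user's language
    noise_db: float           # where: ambient noise level


def pick_modalities(ctx: Context) -> dict:
    """Choose an input and an output channel, falling back when voice is unusable."""
    quiet_enough = ctx.noise_db < NOISE_LIMIT_DB
    voice_ok = ctx.can_speak and ctx.language_supported and quiet_enough
    audio_ok = ctx.can_hear and quiet_enough
    return {
        "input": "voice" if voice_ok else "touchpad",
        "output": "speech" if audio_ok else "display",
    }


print(pick_modalities(Context(True, True, True, 70)))
print(pick_modalities(Context(True, False, True, 70)))
print(pick_modalities(Context(True, True, True, 105)))
```

The point of the design is simply that voice is the preferred channel, never the only one: each condition that rules it out has a fallback.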

“There is also a thing linked with what smart glasses are used for. Companies today are looking for solutions that secure their data and overall confidentiality. But embedded technologies cannot compete with the Cloud when the features we ask for are becoming too futuristic. Companies should really question themselves to figure out the balance between resilience and capacities.”

Did you know we made a webinar on this specific topic?

Here’s a quick trailer of the 30-minute discussion that our experts from Vuzix, AMA and Vivoka had in late March.

You want to watch the whole conference? Click here to get access!

Quick FAQ: Demystifying the use of voice technologies by tackling common objections

“It is great for the smart glass business to have such tools as the Voice Development Kit (VDK) as it makes it easy for smart glass manufacturer to have fantastic UX based on voice commands. And thanks to Realwear and other players we know how this is a real game changer for user adoption.”

Let’s see how the Voice Development Kit that we provide, which currently powers smart glasses (or is in the process of doing so for other models…), answers common objections:

“Embedded speech technologies lack language support.”

- We support more than 40 different languages for voice commands and 60+ languages that can be synthesized.

“Noise ruins audio quality, and speech recognition with it.”

- We did several full demonstrations of the Vuzix smart glasses during CES (the world’s largest innovation show), and the device responded perfectly despite the noise. All you need is efficient hardware that matches the software requirements.

“Speech recognition is not accurate.”

- We prefer to go for specialized grammar-based commands that are made to perfectly recognize expected commands.
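To give an idea of what grammar-based recognition means, here is a minimal sketch in Python. It is not the Voice Development Kit’s API: the rule syntax, the command phrasings and the `recognize` function are all hypothetical, and the matching runs on an already transcribed text rather than on audio. The idea it illustrates is that only the phrasings listed in the grammar are accepted, which is what keeps recognition tight.

```python
import re
from typing import Optional

# Hypothetical command grammar: each intent maps to the phrasings it accepts.
# Square brackets mark an optional word, "|" separates alternatives.
GRAMMAR = {
    "take_photo": "take [a] (photo|picture)",
    "start_recording": "start [the] recording",
    "flashlight_on": "(turn on|activate) [the] (flashlight|torch)",
}


def compile_rule(rule: str) -> re.Pattern:
    """Turn a bracket/pipe rule into a regular expression."""
    pattern = rule.replace("(", "(?:")  # alternatives become non-capturing groups
    pattern = re.sub(r"\[([^\]]+)\] ?", r"(?:\1 )?", pattern)  # optional words
    return re.compile(pattern)


COMPILED = {intent: compile_rule(rule) for intent, rule in GRAMMAR.items()}


def recognize(transcript: str) -> Optional[str]:
    """Return the matching intent, or None when the phrase is outside the grammar."""
    # Normalize: lowercase, drop punctuation, collapse whitespace.
    text = " ".join(re.sub(r"[^a-z ]", "", transcript.lower()).split())
    for intent, pattern in COMPILED.items():
        if pattern.fullmatch(text):
            return intent
    return None


print(recognize("Take a picture"))
print(recognize("Activate flashlight"))
print(recognize("What's the weather?"))
```

Anything that falls outside the grammar is rejected rather than guessed at, which is the trade-off of this approach: less flexibility than free-form dictation, in exchange for predictable results on the commands that matter.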

“You always need an internet connection to have a smooth experience.”

- Embedded technologies make the device resilient and autonomous from the internet. Voice anywhere, anytime.

“Voice assistants are always listening and recording.”

- Data privacy can be secured with on-device processing.

“Voice technologies are expensive.”

- With “per request” or subscription business models, yes, it can be expensive. With on-device processing, you can find one-time licensing alternatives such as the one we provide.

“We don’t have the technical team nor the skills to develop voice capacities.”